Difference between revisions of "AI Agents"

KevinYager (talk | contribs) (→Inter-agent communications) |

KevinYager (talk | contribs) (→Iterative reasoning via graphs) |

Latest revision as of 15:51, 26 November 2024

Contents

- 1 Reviews & Perspectives

- 2 AI Assistants

- 3 Advanced Workflows

- 4 Corporate AI Agent Ventures

- 5 Increasing AI Agent Intelligence

- 5.1 Proactive Search

- 5.2 Inference Time Compute

- 5.2.1 Methods

- 5.2.2 In context learning (ICL), search, and other inference-time methods

- 5.2.3 Inference-time Sampling

- 5.2.4 Inference-time Gradient

- 5.2.5 Self-prompting

- 5.2.6 In-context thought

- 5.2.7 Naive multi-LLM (verification, majority voting, best-of-N, etc.)

- 5.2.8 Multi-LLM (multiple comparisons, branching, etc.)

- 5.2.9 Iteration (e.g. neural-like layered blocks)

- 5.2.10 Iterative reasoning via graphs

- 5.2.11 Monte Carlo Tree Search (MCTS)

- 5.2.12 Other Search

- 5.2.13 Scaling

- 5.2.14 Theory

- 5.2.15 Expending compute works

- 5.2.16 Code for Inference-time Compute

- 5.3 Memory

- 5.4 Tool Use

- 5.5 Multi-agent Effort (and Emergent Intelligence)

- 5.6 ML-like Optimization of LLM Setup

- 6 Multi-agent orchestration

- 7 Optimization

- 8 See Also

Reviews & Perspectives

Published

- 2024-04: LLM Reasoners: New Evaluation, Library, and Analysis of Step-by-Step Reasoning with Large Language Models (code)

- 2024-08: From LLMs to LLM-based Agents for Software Engineering: A Survey of Current, Challenges and Future

- 2024-09: Towards a Science Exocortex

- 2024-09: Large Language Model-Based Agents for Software Engineering: A Survey

- 2024-09: Agents in Software Engineering: Survey, Landscape, and Vision

Continually updating

- OpenThought - System 2 Research Links

- Awesome LLM Strawberry (OpenAI o1): Collection of research papers & blogs for OpenAI Strawberry(o1) and Reasoning

Analysis/Opinions

- LLMs Can't Plan, But Can Help Planning in LLM-Modulo Frameworks

- Cutting AI Assistant Costs by Up to 77.8%: The Power of Enhancing LLMs with Business Logic

AI Assistants

Components of AI Assistants

Information Retrieval

- See also RAG.

- 2024-10: Agentic Information Retrieval

Open-source

- Khoj (code): self-hostable AI assistant

- RAGapp: Agentic RAG for enterprise

- STORM: Synthesis of Topic Outlines through Retrieval and Multi-perspective Question Asking

- Can write (e.g.) Wikipedia-style articles

- code

- Preprint: Assisting in Writing Wikipedia-like Articles From Scratch with Large Language Models

Personalities/Personas

- 2023-10: Generative Agents: Interactive Simulacra of Human Behavior

- 2024-11: Microsoft TinyTroupe 🤠🤓🥸🧐: LLM-powered multiagent persona simulation for imagination enhancement and business insights

- 2024-11: Generative Agent Simulations of 1,000 People (code)

Specific Uses for AI Assistants

Computer Use

Science Agents

See Science Agents.

LLM-as-judge

- List of papers.

- LLM Evaluation doesn't need to be complicated

- Evaluating the Effectiveness of LLM-Evaluators (aka LLM-as-Judge)

- 2024-10: Agent-as-a-Judge: Evaluate Agents with Agents

- 2024-11: A Survey on LLM-as-a-Judge

Advanced Workflows

- Salesforce DEI: meta-system that leverages a diversity of SWE agents

- Sakana AI: AI Scientist

- SciAgents: Automating scientific discovery through multi-agent intelligent graph reasoning

Software Development Workflows

Several paradigms of AI-assisted coding have arisen:

- Manual, human driven

- AI-aided through chat/dialogue, where the human asks for code and then copies it into the project

- API calls to an LLM, which generates code and inserts the file into the project

- LLM-integration into the IDE

- AI-assisted IDE, where the AI generates and manages the dev environment

- Replit

- Aider (code): Pair programming on commandline

- Pythagora

- StackBlitz bolt.new

- Cline (formerly Claude Dev)

- Prompt-to-product

- Semi-autonomous software engineer agents

For a review of the current state of software-engineering agentic approaches, see:

- 2024-08: From LLMs to LLM-based Agents for Software Engineering: A Survey of Current, Challenges and Future

- 2024-09: Large Language Model-Based Agents for Software Engineering: A Survey

- 2024-09: Agents in Software Engineering: Survey, Landscape, and Vision

Corporate AI Agent Ventures

Mundane Workflows and Capabilities

- Payman AI: AI to Human platform that allows AI to pay people for what it needs

- VoiceFlow: Build customer experiences with AI

- Mistral AI: genAI applications

- Taskade: Task/milestone software with AI agent workflows

- Covalent: Building a Multi-Agent Prompt Refining Application

Inference-compute Reasoning

Agentic Systems

- Topology AI

- Cognition AI: Devin software engineer (14% on SWE-bench)

- Honeycomb (22% on SWE-bench)

Increasing AI Agent Intelligence

Proactive Search

Compute expended after training, but before inference.

Training Data (Data Refinement, Synthetic Data)

- Cf. image datasets:

- 2024-09: Programming Every Example: Lifting Pre-training Data Quality like Experts at Scale

- 2024-10: Data Cleaning Using Large Language Models

- Updating list of links: Synthetic Data of LLMs, by LLMs, for LLMs

Generate consistent plans/thoughts

- 2024-08: Mutual Reasoning Makes Smaller LLMs Stronger Problem-Solvers (code)

- (Microsoft) rStar is a self-play mutual-reasoning approach: a small model expands a Monte Carlo Tree Search (MCTS) using a defined set of reasoning heuristics, and mutually consistent trajectories are emphasized.

- 2024-09: Self-Harmonized Chain of Thought

- Produces refined chain-of-thought style solutions/prompts for diverse problems. Given a large set of problems/questions, first aggregate them semantically, then apply zero-shot chain-of-thought to each problem. Then cross-pollinate between the proposed solutions to similar problems, looking for refined and generalized solutions.
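
The three steps described for Self-Harmonized Chain of Thought (group similar questions, draft zero-shot rationales, refine each one using its neighbors) can be sketched roughly as follows. This is only an illustration of the description above, not the paper's implementation: `harmonize`, `cluster_of`, and `solve` are hypothetical names, and `solve` stands in for whatever LLM call produces a rationale.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# Hypothetical signature: solve(question, demonstrations) -> rationale text.
Solver = Callable[[str, List[Tuple[str, str]]], str]


def harmonize(
    questions: List[str],
    cluster_of: Callable[[str], int],
    solve: Solver,
    rounds: int = 1,
) -> Dict[str, str]:
    """Group questions, draft zero-shot rationales, then refine with peers."""
    # Step 1: aggregate questions into groups of similar problems.
    groups: Dict[int, List[str]] = defaultdict(list)
    for q in questions:
        groups[cluster_of(q)].append(q)

    # Step 2: zero-shot pass (no demonstrations) for every question.
    rationales = {q: solve(q, []) for q in questions}

    # Step 3: cross-pollinate. Re-solve each question using the current
    # rationales of the other questions in its group as demonstrations.
    for _ in range(rounds):
        for members in groups.values():
            for q in members:
                peers = [(p, rationales[p]) for p in members if p != q]
                if peers:
                    rationales[q] = solve(q, peers)
    return rationales
```

In practice `cluster_of` would likely be backed by an embedding model and `solve` by a prompted LLM; both are left abstract here so the control flow stays visible.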

Sampling

- 2024-11: Language Models are Hidden Reasoners: Unlocking Latent Reasoning Capabilities via Self-Rewarding (code)

Automated prompt generation

Distill inference-time-compute into model

- 2023-10: Reflection-Tuning: Data Recycling Improves LLM Instruction-Tuning (U. Maryland, Adobe)

- 2023-11: Implicit Chain of Thought Reasoning via Knowledge Distillation (Harvard, Microsoft, Hopkins)

- 2024-02: Grandmaster-Level Chess Without Search (Google DeepMind)

- 2024-07: Fine-Tuning with Divergent Chains of Thought Boosts Reasoning Through Self-Correction in Language Models

- 2024-07: BOND: Aligning LLMs with Best-of-N Distillation

- 2024-09: Training Language Models to Self-Correct via Reinforcement Learning (Google DeepMind)

- 2024-10: Thinking LLMs: General Instruction Following with Thought Generation

- 2024-10: Dualformer: Controllable Fast and Slow Thinking by Learning with Randomized Reasoning Traces

CoT reasoning model

- 2024-09: OpenAI o1

- 2024-10: O1 Replication Journey: A Strategic Progress Report – Part 1 (code): Attempt by Walnut Plan to reproduce o1-like in-context reasoning

- 2024-11: DeepSeek-R1-Lite-Preview reasoning model

- 2024-11: Marco-o1: Towards Open Reasoning Models for Open-Ended Solutions

- 2024-11: O1 Replication Journey -- Part 2: Surpassing O1-preview through Simple Distillation, Big Progress or Bitter Lesson?

Scaling

- 2024-08: Smaller, Weaker, Yet Better: Training LLM Reasoners via Compute-Optimal Sampling (Google DeepMind)

- 2024-11: Scaling Laws for Pre-training Agents and World Models

Inference Time Compute

Methods

In context learning (ICL), search, and other inference-time methods

- 2023-03: Reflexion: Language Agents with Verbal Reinforcement Learning

- 2023-05: VOYAGER: An Open-Ended Embodied Agent with Large Language Models

- 2024-04: Many-Shot In-Context Learning

- 2024-08: Automated Design of Agentic Systems

- 2024-09: Planning In Natural Language Improves LLM Search For Code Generation

Inference-time Sampling

- 2024-10: entropix: Entropy Based Sampling and Parallel CoT Decoding

- 2024-10: TreeBoN: Enhancing Inference-Time Alignment with Speculative Tree-Search and Best-of-N Sampling

- 2024-11: Turning Up the Heat: Min-p Sampling for Creative and Coherent LLM Outputs

Inference-time Gradient

Self-prompting

- 2023-05: Reprompting: Automated Chain-of-Thought Prompt Inference Through Gibbs Sampling

- 2023-11: Rephrase and Respond: Let Large Language Models Ask Better Questions for Themselves

In-context thought

- 2022-01: Chain-of-Thought Prompting Elicits Reasoning in Large Language Models (Google Brain)

- 2023-05: Tree of Thoughts: Deliberate Problem Solving with Large Language Models (Google DeepMind)

- 2024-05: Faithful Logical Reasoning via Symbolic Chain-of-Thought

- 2024-06: A Tree-of-Thoughts to Broaden Multi-step Reasoning across Languages

- 2024-09: To CoT or not to CoT? Chain-of-thought helps mainly on math and symbolic reasoning

- 2024-09: Iteration of Thought: Leveraging Inner Dialogue for Autonomous Large Language Model Reasoning (Agnostiq, Toronto)

- 2024-09: Logic-of-Thought: Injecting Logic into Contexts for Full Reasoning in Large Language Models

- 2024-10: A Theoretical Understanding of Chain-of-Thought: Coherent Reasoning and Error-Aware Demonstration (failed reasoning traces can improve CoT)

- 2024-10: Tree of Problems: Improving structured problem solving with compositionality

- 2023-01/2024-10: A Survey on In-context Learning

Naive multi-LLM (verification, majority voting, best-of-N, etc.)

- 2023-06: LLM-Blender: Ensembling Large Language Models with Pairwise Ranking and Generative Fusion (code)

- 2023-12: Dynamic Voting for Efficient Reasoning in Large Language Models

- 2024-04: Regularized Best-of-N Sampling to Mitigate Reward Hacking for Language Model Alignment

- 2024-08: Dynamic Self-Consistency: Leveraging Reasoning Paths for Efficient LLM Sampling

- 2024-11: Multi-expert Prompting Improves Reliability, Safety, and Usefulness of Large Language Models
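
As a rough illustration of the two simplest aggregation schemes named in this heading, majority voting and best-of-N, here is a minimal sketch. It assumes the candidate answers have already been sampled as strings; `majority_vote`, `best_of_n`, and the `score` callable (for example a verifier or reward model) are hypothetical names, not taken from any of the papers listed.

```python
from collections import Counter
from typing import Callable, Sequence


def majority_vote(answers: Sequence[str]) -> str:
    """Return the most frequent answer; ties go to the earliest-seen answer."""
    if not answers:
        raise ValueError("need at least one answer")
    return Counter(answers).most_common(1)[0][0]


def best_of_n(candidates: Sequence[str], score: Callable[[str], float]) -> str:
    """Return the candidate with the highest score under a given scorer."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=score)


if __name__ == "__main__":
    print(majority_vote(["42", "41", "42"]))
    # len is only a toy stand-in for a real verifier or reward model.
    print(best_of_n(["a", "abc", "ab"], score=len))
```

Majority voting needs no scorer but only works when answers can be compared for equality; best-of-N handles free-form outputs but is only as good as the scorer.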

Multi-LLM (multiple comparisons, branching, etc.)

- 2024-10: Thinking LLMs: General Instruction Following with Thought Generation

- 2024-11: Mixtures of In-Context Learners: Multiple "experts", each with a different set of in-context examples; combine outputs at the level of next-token-prediction

- 2024-11: LLaVA-o1: Let Vision Language Models Reason Step-by-Step (code)
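
The Mixtures of In-Context Learners entry above describes combining several "experts" at the level of next-token prediction. One simple way to picture this, assuming a weighted average of each expert's next-token probabilities, is sketched below. The function names and the toy distributions are hypothetical, and the paper's actual combination rule and weight training are not reproduced here.

```python
from typing import Dict, Sequence


def mix_next_token(
    expert_probs: Sequence[Dict[str, float]],
    weights: Sequence[float],
) -> Dict[str, float]:
    """Weighted average of per-expert next-token probability tables."""
    if not expert_probs or len(expert_probs) != len(weights):
        raise ValueError("need one weight per expert")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    mixed: Dict[str, float] = {}
    for probs, w in zip(expert_probs, weights):
        for token, p in probs.items():
            # Normalize weights so the result is still a distribution.
            mixed[token] = mixed.get(token, 0.0) + (w / total) * p
    return mixed


def greedy_token(mixed: Dict[str, float]) -> str:
    """Pick the highest-probability token from the mixed distribution."""
    return max(mixed, key=mixed.get)


if __name__ == "__main__":
    # Each expert saw a different set of in-context examples (toy numbers).
    experts = [
        {"yes": 0.9, "no": 0.1},
        {"yes": 0.2, "no": 0.8},
        {"yes": 0.3, "no": 0.7},
    ]
    print(greedy_token(mix_next_token(experts, [1.0, 1.0, 1.0])))
    print(greedy_token(mix_next_token(experts, [3.0, 1.0, 1.0])))
```

Changing the weights changes which expert dominates, which is the knob such a mixture would tune.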

Iteration (e.g. neural-like layered blocks)

Iterative reasoning via graphs

- 2023-08: Graph of Thoughts: Solving Elaborate Problems with Large Language Models

- 2023-10: Amortizing intractable inference in large language models (code)

- 2024-09: On the Diagram of Thought: Iterative reasoning as a directed acyclic graph (DAG)
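
To make the "reasoning as a DAG" idea concrete, the sketch below evaluates reasoning steps in dependency order, so each step only runs once everything it builds on is available. It uses Python's standard `graphlib` module; `run_reasoning_dag` and the `step` callable (which would wrap an LLM call) are hypothetical and not drawn from the papers above.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List


def run_reasoning_dag(
    depends_on: Dict[str, List[str]],
    step: Callable[[str, Dict[str, str]], str],
) -> Dict[str, str]:
    """Run each node after its dependencies, passing their results along.

    depends_on maps a node name to the nodes it builds on. A cycle raises
    graphlib.CycleError, since the graph must be acyclic.
    """
    results: Dict[str, str] = {}
    for node in TopologicalSorter(depends_on).static_order():
        inputs = {dep: results[dep] for dep in depends_on.get(node, [])}
        results[node] = step(node, inputs)
    return results


if __name__ == "__main__":
    graph = {
        "critique": ["proposal"],
        "summary": ["proposal", "critique"],
    }
    # Toy step: record which earlier nodes each node could see.
    trace = run_reasoning_dag(
        graph, lambda name, inputs: f"{name}({','.join(sorted(inputs))})"
    )
    for name, value in trace.items():
        print(name, "->", value)
```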

Monte Carlo Tree Search (MCTS)

- 2024-05: AlphaMath Almost Zero: Process Supervision without Process

- 2024-06: ReST-MCTS*: LLM Self-Training via Process Reward Guided Tree Search

- 2024-06: Improve Mathematical Reasoning in Language Models by Automated Process Supervision

- 2024-06: Accessing GPT-4 level Mathematical Olympiad Solutions via Monte Carlo Tree Self-refine with LLaMa-3 8B

- 2024-07: Tree Search for Language Model Agents

- 2024-10: Interpretable Contrastive Monte Carlo Tree Search Reasoning

Other Search

Scaling

- 2021-04: Scaling Scaling Laws with Board Games

- 2024-03: Are More LLM Calls All You Need? Towards Scaling Laws of Compound Inference Systems

- 2024-04: The Larger the Better? Improved LLM Code-Generation via Budget Reallocation

- 2024-07: Large Language Monkeys: Scaling Inference Compute with Repeated Sampling

- 2024-08: An Empirical Analysis of Compute-Optimal Inference for Problem-Solving with Language Models

- 2024-08: Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters

- 2024-10: (comparing fine-tuning to in-context learning) Is In-Context Learning Sufficient for Instruction Following in LLMs?

Theory

Expending compute works

- 2024-06-10: Blog post (opinion): AI Search: The Bitter-er Lesson

- 2024-07-17: Blog post (test): Getting 50% (SoTA) on ARC-AGI with GPT-4o

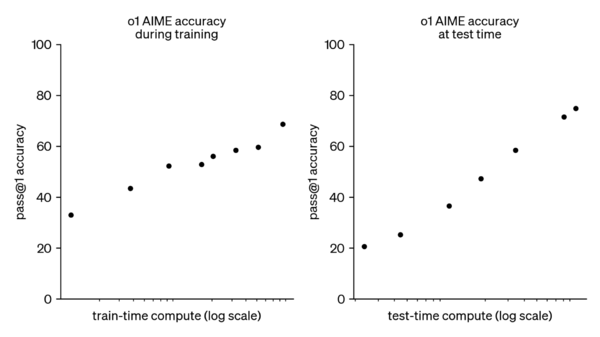

- 2024-09-12: OpenAI o1: Learning to Reason with LLMs

- 2024-09-16: Scaling: The State of Play in AI

Code for Inference-time Compute

- optillm: Inference proxy which implements state-of-the-art techniques to improve accuracy and performance of LLMs (improve reasoning over coding, logical and mathematical queries)

Memory

Tool Use

- 2024-11: DynaSaur: Large Language Agents Beyond Predefined Actions: writes functions/code to increase capabilities

Multi-agent Effort (and Emergent Intelligence)

- 2024-10: Model Swarms: Collaborative Search to Adapt LLM Experts via Swarm Intelligence

- 2024-10: Agent-as-a-Judge: Evaluate Agents with Agents

- 2024-11: Project Sid: Many-agent simulations toward AI civilization

ML-like Optimization of LLM Setup

- 2023-03: DSPy: Compiling Declarative Language Model Calls into Self-Improving Pipelines (code: Programming—not prompting—Foundation Models)

- 2024-05: Automatic Prompt Optimization with "Gradient Descent" and Beam Search

- 2024-06: TextGrad: Automatic "Differentiation" via Text (gradient backpropagation through text)

- 2024-06: Symbolic Learning Enables Self-Evolving Agents (optimize LLM frameworks)

Multi-agent orchestration

Research demos

- Camel

- LoopGPT

- JARVIS

- OpenAGI

- AutoGen

- preprint: AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation

- Agent-E: Browser (eventually computer) automation (code, preprint, demo video)

- AutoGen Studio: GUI for agent workflows (code)

- Magentic-One: A Generalist Multi-Agent System for Solving Complex Tasks

- AG2 (previously AutoGen) (code, docs, Discord)

- TaskWeaver

- MetaGPT

- AutoGPT (code); and AutoGPT Platform

- Optima

- 2024-04: LLM Reasoners: New Evaluation, Library, and Analysis of Step-by-Step Reasoning with Large Language Models (code)

- 2024-06: MASAI: Modular Architecture for Software-engineering AI Agents

- 2024-10: Agent S: An Open Agentic Framework that Uses Computers Like a Human (code)

Related work

Inter-agent communications

- 2024-10: Agora: A Scalable Communication Protocol for Networks of Large Language Models (preprint): disparate agents auto-negotiate communication protocol

- 2024-11: DroidSpeak: Enhancing Cross-LLM Communication: Exploits caches of embeddings and key-values, to allow context to be more easily transferred between AIs (without consuming context window)

- 2024-11: Anthropic describes Model Context Protocol: an open standard for secure, two-way connections between data sources and AI (intro, quickstart, code)

Architectures

Open Source Frameworks

- LangChain

- ell (code, docs)

- AgentOps AI AgentStack

- Agent UI

- kyegomez swarms

- OpenAI Swarm (cookbook)

- Amazon AWS Multi-Agent Orchestrator

- KaibanJS: Kanban for AI Agents? (Takes inspiration from Kanban visual work management.)

Open Source Systems

- ControlFlow

- OpenHands (formerly OpenDevin)

- code: platform for autonomous software engineers, powered by AI and LLMs

- Report: OpenDevin: An Open Platform for AI Software Developers as Generalist Agents

Commercial Automation Frameworks

- Lutra: Automation and integration with various web systems.

- Gumloop

- TextQL: Enterprise Virtual Data Analyst

- Athena intelligence: Analytics platform

- Nexus GPT: Business co-pilot

- Multi-On: AI agent that acts on your behalf

- Firecrawl: Turn websites into LLM-ready data

- Reworkd: End-to-end data extraction

- Lindy: Custom AI Assistants to automate business workflows

- E.g. use Slack

- Bardeen: Automate workflows

- Abacus: AI Agents

- LlamaIndex: (𝕏, code, docs, Discord)

- MultiOn AI: Agent Q (paper) automated planning and execution

Spreadsheet

Cloud solutions

- Numbers Station Meadow: agentic framework for data workflows (code).

- CrewAI says they provide multi-agent automations (code).

- LangChain introduced LangGraph to help build agents, and LangGraph Cloud as a service for running those agents.

- LangGraph Studio is an IDE for agent workflows

- C3 AI enterprise platform

- Deepset AI Haystack (docs, code)

Frameworks

- Google Project Oscar

- Agent: Gaby (for "Go AI bot") (code, documentation) helps with issue tracking.

- OpenPlexity-Pages: Data-aggregator implementation (like Perplexity) based on CrewAI

Optimization

Metrics, Benchmarks

- 2022-06: PlanBench: An Extensible Benchmark for Evaluating Large Language Models on Planning and Reasoning about Change

- 2024-04: AutoRace (code): LLM Reasoners: New Evaluation, Library, and Analysis of Step-by-Step Reasoning with Large Language Models

- 2024-04: OSWorld: Benchmarking Multimodal Agents for Open-Ended Tasks in Real Computer Environments (github)

- 2024-07: AI Agents That Matter

- 2024-09: CORE-Bench: Fostering the Credibility of Published Research Through a Computational Reproducibility Agent Benchmark (leaderboard)

- 2024-09: LLMs Still Can't Plan; Can LRMs? A Preliminary Evaluation of OpenAI's o1 on PlanBench

- 2024-09: On The Planning Abilities of OpenAI's o1 Models: Feasibility, Optimality, and Generalizability

- 2024-10: MLE-bench: Evaluating Machine Learning Agents on Machine Learning Engineering

- 2024-10: WorFBench: Benchmarking Agentic Workflow Generation

- 2024-10: VibeCheck: Discover and Quantify Qualitative Differences in Large Language Models

- 2024-10: SimpleQA: Measuring short-form factuality in large language models (announcement, code)

- 2024-11: RE-Bench: Evaluating frontier AI R&D capabilities of language model agents against human experts (blog, code)

Agent Challenges

- Aidan-Bench: Tests creativity by having a particular LLM generate a long sequence of outputs (meant to be different) and measuring how long it can go before duplications appear.

- Pictionary: LLM suggests prompt, multiple LLMs generate outputs, LLM judges; allows ranking of the generation abilities.

- MC-bench: Request LLMs to build an elaborate structure in Minecraft; outputs can be A/B tested by human judges.
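
The Aidan-Bench style measurement described above (how many outputs a model produces before it repeats itself) can be sketched as a simple counter. This version assumes duplicates are detected by exact match after normalizing case and whitespace, which is a deliberate simplification; `novel_run_length` is a hypothetical name and this is not the benchmark's own scoring code.

```python
from typing import Iterable


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants count as equal."""
    return " ".join(text.lower().split())


def novel_run_length(outputs: Iterable[str]) -> int:
    """Count outputs produced before the first (normalized) duplicate."""
    seen = set()
    count = 0
    for text in outputs:
        key = _normalize(text)
        if key in seen:
            break
        seen.add(key)
        count += 1
    return count


if __name__ == "__main__":
    print(novel_run_length(["Red", "Blue", "  red "]))
```

A similarity threshold over embeddings could replace the exact-match check to catch paraphrased repeats, at the cost of choosing a threshold.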

Automated Improvement

- 2024-06: EvoAgent: Towards Automatic Multi-Agent Generation via Evolutionary Algorithms

- 2024-06: Symbolic Learning Enables Self-Evolving Agents

- 2024-08: Automated Design of Agentic Systems (ADAS code)

- 2024-08: Self-Taught Evaluators: Iterative self-improvement through generation of synthetic data and evaluation