Talk:ERI

AI Progress

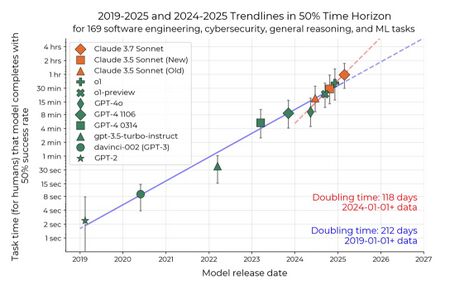

We should expect AI to improve dramatically over the next few years.

Expected capabilities (3-5 years)

- Fully automated literature/web research

- Fully automated software development (prompt-to-product)

- Arbitrary AI computer-use

- Swarms of AI agents accomplishing complex tasks

- Capable/versatile humanoid robots

- Human-level AI for tasks that take ~1 day to ~8 years for a human to complete (c.f.)

Implications for Science

- Embodied facilities (full AI control of complex physical systems)

- "Real" AI scientist, automating analysis, paper writing, etc.

- Need to learn how to manage a workforce of AIs

- Huge cognitive-overhang: progress is gated by speed of physical experiments; analysis/thinking is effectively instantaneous

- Need to contend with irrelevance of existing human workforce

Future of AI for Science

Domain-specific Model Training

- Challenge: Exploit the modern foundation-model paradigm to provide generalized models for scientific understanding.

- Research:

- Foundation models pre-trained on vast datasets (as much as available). Could combine existing datasets (Internet crawl) with science-specific data.

- Solve data fusion and data interpretability.

- Connect science FMs to text-based reasoning agents: tool use, handoff sampling, ZUI, latent space reasoning, SAE alignment.

- Impact: Multi-modal models tailored to science would have immediate impact across myriad tasks (analysis, interpretation, prediction, etc.).

- Needed: Access to training data, and sufficient compute.

- Barriers: Large-scale coordination and cooperation among data stakeholders.

Embodied AI Facilities

- Challenge: Treat facility systems (endstations, sample environments, entire accelerator, etc.) as "robots" that an AI is embodied into. Give the AI a coherent, full view of the system and complete control over it, rather than treating AI/ML as a narrow accelerator for specific steps.

- Research: Exploit advances in AI world-model-building and autonomous control (as seen in full-self-driving and robotics, for instance) as control systems for instruments/facilities.

- Impact: Massive increase in instrument utilization and efficiency, as instruments can autonomously conduct experiments, recover from failures, and make intelligent choices about what to do.

- Needed: Significant research effort in fundamentals of AI control, and significant effort for any particular problem-space to curate the required data, documentation, and constraints.

- Barriers: Risk aversion.
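
As a rough illustration of the observe-decide-act loop implied above (autonomously running experiments, recovering from failures, and choosing what to measure next), here is a minimal sketch. Everything in it is hypothetical: `SimulatedInstrument` stands in for a real endstation, the toy signal and failure model are made up, and the "widest gap" rule is only a placeholder for whatever a learned world model would decide.

```python
import random


class SimulatedInstrument:
    """Hypothetical stand-in for a real endstation; not a real facility API."""

    def __init__(self, failure_rate=0.2, seed=0):
        self._rng = random.Random(seed)
        self._failure_rate = failure_rate

    def measure(self, x):
        # Randomly fail to mimic a transient hardware fault.
        if self._rng.random() < self._failure_rate:
            raise RuntimeError("transient instrument fault")
        return (x - 0.3) ** 2  # toy signal, assumed for illustration


def choose_next_point(measured, lo=0.0, hi=1.0):
    """Pick the midpoint of the widest unmeasured gap in [lo, hi].

    A simple placeholder for an AI deciding what to measure next.
    """
    xs = sorted(set(measured) | {lo, hi})
    left, right = max(zip(xs, xs[1:]), key=lambda pair: pair[1] - pair[0])
    return (left + right) / 2


def run_autonomous_scan(instrument, n_points=8, max_retries=5):
    """Observe-decide-act loop with retry-based failure recovery."""
    results = {}
    while len(results) < n_points:
        x = choose_next_point(results)
        for _ in range(max_retries):
            try:
                results[x] = instrument.measure(x)
                break
            except RuntimeError:
                continue  # recover by retrying the same point
        else:
            raise RuntimeError(f"giving up on x={x} after {max_retries} retries")
    return results


if __name__ == "__main__":
    scan = run_autonomous_scan(SimulatedInstrument())
    for x in sorted(scan):
        print(f"x={x:.4f}  signal={scan[x]:.4f}")
```

A real deployment would replace the retry rule with proper fault diagnosis and the gap rule with a model-driven choice; the point here is only the shape of the loop.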

Agentic Ecosystem

- Challenge: Develop agentic AI assistants for science tasks (literature, data, instrument control, etc.). Develop methods to allow agents to cooperate, solving complex tasks as a group.

- Research: Frontier research in allowing LLMs to "think" (system 2 reasoning via inference-time-compute), and having agents interact (multi-agent workflows).

- Impact: Empower human scientists to tackle more complex problems. Properly coordinated, an autonomous AI swarm would act as an "exocortex" that expands human cognition and volition.

- Needed: Improvements in agentic AI. Building a wide range of science agents. Research and infrastructure to persistently run a huge number of agents.

- Barriers: Inference compute costs.

Additional Considerations

Broaden Access to AI Compute

- Challenge: Many current efforts are limited by access to compute. DOE has the resources, but existing access models may need to be revisited.

- Research: Engineering challenge: make premier AI compute (GPUs, etc.) easily available to a diverse community, in an "elastic" mode that scales to constantly changing needs.

- Impact: Acceleration in research and development of AI/ML capabilities.

- Needed: Investment in compute. New infrastructure architectures tailored to making AI available.

- Barriers: Challenges traditional styles of HPC and DOE leadership computing.

Social Aspects

- Challenge: For scientific facilities to unlock the full potential of AI, several "social" and "human factors" aspects must be addressed. Facilities must start planning for the full potential impact of AI (including next-generation models that are even closer to human capabilities).

- Research: AI can help mitigate many social barriers, including:

- Exploit AI to accelerate education, training, and workforce development (of AI and non-AI subject areas)

- AI can act as a "bridge" between researchers in different disciplines

- Exploit AI to handle heterogeneity of standards (meta-data, APIs, documentation, files, etc.)

- Exploit AI to summarize experiments, logs, etc.

- Impact: For AI to have full impact on the DOE mission, social factors must be addressed.

- Needed:

- Open release (data, code, publications, model weights)

- Better user interfaces to AI systems (UI/UX, HCI, etc.); e.g. for navigating complex high-D datasets, exploring networks (publications, analysis workflows)

- Barriers: Planning (in science) is lagging behind AI capabilities. There is resistance to fully embracing AI in science (conservative science planning). The pace of advancement in AI is under-appreciated (most planning assumes models will remain roughly at current capabilities for the next ~5 years).

Additional Ideas

- Science Exocortex: Swarm of persistent AI agents operating on behalf of the researcher. Expands human cognition by persistently working on long-time-horizon science tasks in the background.

- Auto Standardization: The community should give up on worrying about standards for meta-data, code APIs, data/files, documentation, etc. Instead, exploit LLMs that can auto-translate between any/all structured formats.

- Auto Labeling: We should give up on users labeling their data. Instead, exploit pre-trained foundation models to automatically label data.

- AI Bridges: Empower interdisciplinary research and accelerate adoption of AI/ML, by using AIs as the "communication bridges" in collaborations.

- Embodied AI: Start building instruments and facilities as "robot bodies", where AI has access to the full set of sensors and controls.

- Sci-Entropix: Train foundation model on science (e.g. particle trajectories); use entropy-sampling to explore physics.

- Physics Interpretability: Exploit LLM interpretability research (e.g. sparse auto-encoders, SAE) to understand large/complex science datasets. I.e. train foundation models on science data, and then "interpret" the internals, searching for data organized along physical laws.

- Neural reconstruction: Research whether NeRF and Gaussian splatting methods can be adapted to fast reconstruction/visualization of scientific datasets. I.e. treat experimental data as points in a high-D space, with a "projective constraint" defined by how the measurements were done. Obviously this should work for 3D imaging (e.g. tomography); but it might also work for abstract high-D spaces (e.g. material properties).

- Generalized Time-casting: Exploit existing pre-trained time-series forecasting foundation models. Incorporate these into facilities, so that every "signal" is automatically forward-predicted.

- Benchmarks: Progress in AI often depends strongly on defining good metrics and benchmarks. Physical sciences are ideal, as we have clear metrics of consistency and success; DOE facilities are the right place to test/implement, as we have continual data-streams. Community should work together to define meaningful benchmarks for AI Science Agents. Some initial ideas:

- Science creativity (can be measured based on bunching in embedding space)

- Coherent understanding/generation (can be quickly assessed by humans by looking at concise outputs that require extended work; e.g. figures for scientific papers)

- Agentics (build simulators for scientific instruments, and allow AI agent to run experiments)

- Prepare for AGI: What if, in 3-5 years, we have AIs that have almost human-level capabilities? How will this change the way we do science? We should begin seriously researching how to incorporate advanced AIs into science workflows. Begin building increasingly intelligent science AIs of a type that empowers (rather than replaces) human scientists.
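
One of the benchmark ideas above, measuring science creativity by "bunching in embedding space", could be sketched as a dispersion score over the embeddings of an agent's proposed ideas. The sketch below is one possible reading, not an established metric: it assumes each idea has already been turned into a vector by some embedding model (not shown), and it uses mean pairwise cosine distance as the dispersion measure. The function names and the toy vectors are made up for illustration.

```python
import math
from itertools import combinations


def cosine_distance(u, v):
    """1 - cosine similarity; assumes neither vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def mean_pairwise_distance(embeddings):
    """Average cosine distance over all pairs.

    Low values mean the ideas are bunched together in embedding space;
    higher values mean they are more spread out.
    """
    pairs = list(combinations(embeddings, 2))
    if not pairs:
        raise ValueError("need at least two embeddings")
    return sum(cosine_distance(u, v) for u, v in pairs) / len(pairs)


if __name__ == "__main__":
    # Toy 2-D vectors standing in for real idea embeddings.
    bunched = [[1.0, 0.0], [0.99, 0.1], [0.98, 0.05]]
    spread = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
    print("bunched:", mean_pairwise_distance(bunched))
    print("spread: ", mean_pairwise_distance(spread))
```

Dispersion alone would reward nonsense as readily as novelty, so a usable benchmark would presumably pair a score like this with a separate check of scientific validity.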