Difference between revisions of "ERI"

KevinYager (talk | contribs) (→Research Thrusts) |

KevinYager (talk | contribs) (→Models) |

||

| (18 intermediate revisions by the same user not shown) | |||

| Line 5: | Line 5: | ||

==Models== | ==Models== | ||

'''How to adapt frontier methods and foundation models to science?''' | '''How to adapt frontier methods and foundation models to science?''' | ||

| + | |||

| + | [[Image:Cognitive block11.png|400px]] | ||

| + | |||

# Topical fine-tuning | # Topical fine-tuning | ||

# Tool-use | # Tool-use | ||

# Advanced retrieval-augmented generation (RAG++) | # Advanced retrieval-augmented generation (RAG++) | ||

#* '''Novel:''' Pre-generation: Agents continually add content to RAG corpus. ("Pre-thinking" across many vectors.) | #* '''Novel:''' Pre-generation: Agents continually add content to RAG corpus. ("Pre-thinking" across many vectors.) | ||

| + | #** 2025-03: [https://arxiv.org/abs/2503.18866 Reasoning to Learn from Latent Thoughts] | ||

# Science-adapted tokenization/embedding (xVal, [IDK]) | # Science-adapted tokenization/embedding (xVal, [IDK]) | ||

# Specialized sampling | # Specialized sampling | ||

#* Entropy sampling: measure uncertainty of CoT trajectories | #* Entropy sampling: measure uncertainty of CoT trajectories | ||

#* '''Novel:''' Handoff sampling: | #* '''Novel:''' Handoff sampling: | ||

| − | #** text-to-text (specialization, creativity, etc.) | + | #** Useful for: |

| − | #** text-to-tool (e.g. math) | + | #*** text-to-text (specialization, creativity, etc.) |

| − | #** test-to-field (integrate non-textual FM) | + | #*** text-to-tool (e.g. math) |

| + | #*** text-to-field (integrate non-textual FM) | ||

| + | #** Implementation: | ||

| + | #*** MI-SAE on both spaces, find matches (or maybe just "analogies"?) | ||

| + | #*** GNN, e.g.: 2019-11: [https://arxiv.org/abs/1911.11763 SuperGlue: Learning Feature Matching with Graph Neural Networks] ([https://huggingface.co/docs/transformers/main/en/model_doc/superglue hf]) | ||

| + | #*** 2025-02: [https://arxiv.org/abs/2502.03714 Universal Sparse Autoencoders: Interpretable Cross-Model Concept Alignment] | ||

| + | |||

| + | '''Challenge: Connect reasoning models to domain models''' | ||

| + | # Latent space reasoning | ||

| + | # Establish mappings (analogies) between interpretability spaces | ||

| + | #* 2024-12: [https://arxiv.org/abs/2412.16325 Towards Safe and Honest AI Agents with Neural Self-Other Overlap] | ||

| + | #** 2024-07: [https://www.lesswrong.com/posts/hzt9gHpNwA2oHtwKX/self-other-overlap-a-neglected-approach-to-ai-alignment Self-Other Overlap: A Neglected Approach to AI Alignment] | ||

| + | #** 2025-03: [https://www.lesswrong.com/posts/jtqcsARGtmgogdcLT/reducing-llm-deception-at-scale-with-self-other-overlap-fine Reducing LLM deception at scale with self-other overlap fine-tuning] | ||

| + | # Cycling recaptioning/reframing | ||

| + | #* 2024-07: [https://arxiv.org/abs/2407.06723 Graph-Based Captioning: Enhancing Visual Descriptions by Interconnecting Region Captions] | ||

| + | # Tokenizer-for-science: learn right spectrum of representations (for text/image reasoning model) | ||

==Agents== | ==Agents== | ||

'''How to make AI agents smarter?''' | '''How to make AI agents smarter?''' | ||

| + | |||

| + | [[Image:Agent thinking01.png|400px]] | ||

| + | |||

# Iteration schemes (loops, blocks) | # Iteration schemes (loops, blocks) | ||

| + | ## Thinking: | ||

| + | ##* Blocky/neural: Define architecture, allow system to pick hyper-parameters | ||

## Autonomous ideation: | ## Autonomous ideation: | ||

##* '''Novel:''' Treat ideation as an AE problem in a semantic embedding space. | ##* '''Novel:''' Treat ideation as an AE problem in a semantic embedding space. | ||

| − | + | ## Dynamic tree-of-thought: on-demand context generation, allows model to select among data representations (zoom, modality, etc.) | |

| + | # Encode Human Patterns | ||

| + | ## Human scientist workflows (ideation, solving, etc.) | ||

| + | ## Thought-templates, thought-flows | ||

| + | # How to allow agents to run for long time-horizons coherently? | ||

| + | ## ''Basket of Metrics'': Need to define metrics of: (1) research success, (2) uncertainty (entropy sampling?) | ||

| + | ## Tool-use to "call human" and request help/information | ||

# Memory | # Memory | ||

| − | # | + | ## Allow system to insert and retrieve from RAG at will. |

==Exocortex== | ==Exocortex== | ||

'''What is the right architecture for AI swarms?''' | '''What is the right architecture for AI swarms?''' | ||

| + | |||

| + | [[Image:Coord schemes02.png|400px]] | ||

| + | |||

# Interaction schemes | # Interaction schemes | ||

## Test options, identify match between science task and scheme | ## Test options, identify match between science task and scheme | ||

## Treat interaction graph as ML optimization problem | ## Treat interaction graph as ML optimization problem | ||

## '''Novel:''' Map-spatial: Use a map (e.g. of BNL) to localize docs/resources/etc. | ## '''Novel:''' Map-spatial: Use a map (e.g. of BNL) to localize docs/resources/etc. | ||

| − | ## '''Novel:''' Pseudo-spatial: Use position in embedding space to localize everything | + | ## '''Novel:''' Pseudo-spatial: Use position in embedding space to localize everything. Evolving state (velocity/momentum) of agent carries information. |

| − | ## '''Novel:''' Dynamic-pseudo-spatial: Allow the space to be learned and updated | + | ## '''Novel:''' Dynamic-pseudo-spatial: Allow the space to be learned and updated; directions in embedding space can dictate information flow |

# Establish benchmarks/challenges/validations | # Establish benchmarks/challenges/validations | ||

| − | |||

==Infrastructure== | ==Infrastructure== | ||

| Line 50: | Line 82: | ||

===Human-Computer Interaction (HCI)=== | ===Human-Computer Interaction (HCI)=== | ||

'''What should the HCI be?''' | '''What should the HCI be?''' | ||

| + | ===Resources=== | ||

| + | # Need models, data, facilities, etc. all accessible as API endpoints. | ||

Latest revision as of 09:29, 26 March 2025

ERI


Research Thrusts

Models

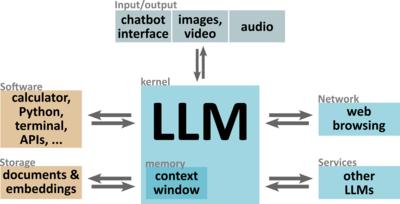

How to adapt frontier methods and foundation models to science?

- Topical fine-tuning

- Tool-use

- Advanced retrieval-augmented generation (RAG++)

- Novel: Pre-generation: Agents continually add content to RAG corpus. ("Pre-thinking" across many vectors.)

- Science-adapted tokenization/embedding (xVal, [IDK])

- Specialized sampling

- Entropy sampling: measure uncertainty of CoT trajectories

- Novel: Handoff sampling:

- Useful for:

- text-to-text (specialization, creativity, etc.)

- text-to-tool (e.g. math)

- text-to-field (integrate non-textual FM)

- Implementation:

- MI-SAE on both spaces, find matches (or maybe just "analogies"?)

- GNN, e.g.: 2019-11: SuperGlue: Learning Feature Matching with Graph Neural Networks (hf)

- 2025-02: Universal Sparse Autoencoders: Interpretable Cross-Model Concept Alignment

Challenge: Connect reasoning models to domain models

- Latent space reasoning

- Establish mappings (analogies) between interpretability spaces

- Cycling recaptioning/reframing

- Tokenizer-for-science: learn the right spectrum of representations (for text/image reasoning model)
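
The "entropy sampling" item under Specialized sampling could be read in several ways. The sketch below shows one possible reading, not a confirmed method: sample several CoT trajectories, reduce each to its final answer, and take the Shannon entropy of the answer distribution as an uncertainty score. The function names and the reduction to final-answer strings are assumptions made for illustration.

```python
import math
from collections import Counter


def answer_entropy(final_answers):
    """Shannon entropy (bits) of the empirical distribution of final answers.

    Assumption: each sampled CoT trajectory has already been reduced to a
    single final-answer string.
    """
    counts = Counter(final_answers)
    total = len(final_answers)
    # p * log2(1/p) summed over distinct answers
    return sum((c / total) * math.log2(total / c) for c in counts.values())


def normalized_entropy(final_answers):
    """Entropy scaled to [0, 1] by the maximum possible for this sample count."""
    n = len(final_answers)
    if n < 2:
        return 0.0
    return answer_entropy(final_answers) / math.log2(n)
```

A score near 0 means the sampled trajectories agree. A score near 1 means nearly every trajectory ended somewhere different, which might be a signal to hand off, call a tool, or ask for help.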

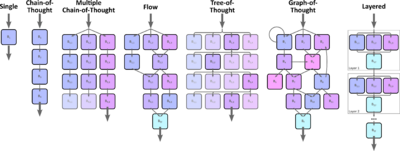

Agents

How to make AI agents smarter?

- Iteration schemes (loops, blocks)

- Thinking:

- Blocky/neural: Define architecture, allow system to pick hyper-parameters

- Autonomous ideation:

- Novel: Treat ideation as an AE problem in a semantic embedding space.

- Dynamic tree-of-thought: on-demand context generation, allows model to select among data representations (zoom, modality, etc.)

- Encode Human Patterns

- Human scientist workflows (ideation, solving, etc.)

- Thought-templates, thought-flows

- How to allow agents to run coherently over long time horizons?

- Basket of Metrics: Need to define metrics for: (1) research success, (2) uncertainty (entropy sampling?)

- Tool-use to "call human" and request help/information

- Memory

- Allow system to insert and retrieve from RAG at will.
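
As a rough illustration of the Memory item above, here is a toy store that an agent could write to and query at will. It is a minimal sketch under stated assumptions: `ToyMemory`, `insert`, and `retrieve` are hypothetical names, and bag-of-words cosine similarity stands in for whatever embedding model a real RAG system would use.

```python
import math
import re
from collections import Counter


class ToyMemory:
    """In-memory note store with bag-of-words cosine retrieval (illustrative only)."""

    def __init__(self):
        self.notes = []  # list of (text, token-count vector)

    @staticmethod
    def _tokens(text):
        # Lowercased alphanumeric tokens; a stand-in for a real embedding.
        return Counter(re.findall(r"[a-z0-9]+", text.lower()))

    def insert(self, text):
        """Add a note to the corpus."""
        self.notes.append((text, self._tokens(text)))

    def retrieve(self, query, k=1):
        """Return the k stored notes most similar to the query."""
        q = self._tokens(query)
        q_norm = math.sqrt(sum(v * v for v in q.values()))

        def score(vec):
            dot = sum(q[t] * vec[t] for t in q)
            norm = q_norm * math.sqrt(sum(v * v for v in vec.values()))
            return dot / norm if norm else 0.0

        ranked = sorted(self.notes, key=lambda note: score(note[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

In an agent loop, `insert` and `retrieve` would typically be exposed as two tools, so the model itself decides when to write or read.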

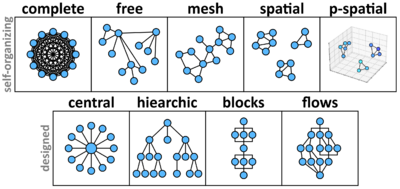

Exocortex

What is the right architecture for AI swarms?

- Interaction schemes

- Test options, identify match between science task and scheme

- Treat interaction graph as ML optimization problem

- Novel: Map-spatial: Use a map (e.g. of BNL) to localize docs/resources/etc.

- Novel: Pseudo-spatial: Use position in embedding space to localize everything. Evolving state (velocity/momentum) of agent carries information.

- Novel: Dynamic-pseudo-spatial: Allow the space to be learned and updated; directions in embedding space can dictate information flow

- Establish benchmarks/challenges/validations
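
The pseudo-spatial idea above can be sketched in a few lines, assuming each agent is assigned a position vector in some embedding space. Everything here is hypothetical: the agent names, the 2-D coordinates, and the choice of Euclidean distance are illustrative assumptions, not a description of an existing system.

```python
import math


def distance(a, b):
    """Euclidean distance between two position vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_agents(positions, source, k=2):
    """Names of the k agents closest to `source` in the embedding space."""
    others = [name for name in positions if name != source]
    others.sort(key=lambda name: distance(positions[source], positions[name]))
    return others[:k]


def velocity(previous, current):
    """Displacement of one agent between two successive positions."""
    return [c - p for p, c in zip(previous, current)]
```

Under this sketch, a message from one agent would be routed to its nearest neighbors, and the velocity vector gives a crude indication of which topics an agent is drifting toward.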

Infrastructure

Architecture

What software architecture is needed?

- Code for scaffolding

- Scheme for inter-agent messaging (plain English w/ pointers, etc.)

- Data management
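
One possible shape for the inter-agent messaging scheme above ("plain English w/ pointers") is a small envelope carrying free text plus a list of references to data. The class and field names below are assumptions made for illustration, and JSON is just one convenient wire format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AgentMessage:
    """Hypothetical message envelope: plain-English body plus data pointers."""

    sender: str
    recipient: str
    body: str  # plain-English content
    pointers: list = field(default_factory=list)  # e.g. URIs or record ids

    def to_json(self):
        """Serialize the message for transport."""
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, payload):
        """Rebuild a message from its JSON form."""
        return cls(**json.loads(payload))
```

Keeping bulk data behind pointers means the text body stays short enough to fit in a model's context, while the receiving agent can dereference only what it needs.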

Hardware

How to implement inference-time compute for exocortex?

- Heterogeneous hardware

- Elastic (combine local & cloud)

- Workflow management

Human-Computer Interaction (HCI)

What should the HCI be?

Resources

- Need models, data, facilities, etc. all accessible as API endpoints.