Difference between revisions of "AI understanding"

KevinYager (→Other)

KevinYager (→Interpretability)

(20 intermediate revisions by the same user not shown)

| Line 11: | Line 11: | ||

* 2025-01: [https://arxiv.org/abs/2501.14926 Interpretability in Parameter Space: Minimizing Mechanistic Description Length with Attribution-based Parameter Decomposition] ([https://www.alignmentforum.org/posts/EPefYWjuHNcNH4C7E/attribution-based-parameter-decomposition blog post]) | * 2025-01: [https://arxiv.org/abs/2501.14926 Interpretability in Parameter Space: Minimizing Mechanistic Description Length with Attribution-based Parameter Decomposition] ([https://www.alignmentforum.org/posts/EPefYWjuHNcNH4C7E/attribution-based-parameter-decomposition blog post]) | ||

* 2025-01: Review: [https://arxiv.org/abs/2501.16496 Open Problems in Mechanistic Interpretability] | * 2025-01: Review: [https://arxiv.org/abs/2501.16496 Open Problems in Mechanistic Interpretability] | ||

| + | * 2025-03: Anthropic: [https://www.anthropic.com/research/tracing-thoughts-language-model Tracing the thoughts of a large language model] | ||

| + | ** [https://transformer-circuits.pub/2025/attribution-graphs/methods.html Circuit Tracing: Revealing Computational Graphs in Language Models] | ||

| + | ** [https://transformer-circuits.pub/2025/attribution-graphs/biology.html On the Biology of a Large Language Model] | ||

==Semanticity== | ==Semanticity== | ||

| Line 22: | Line 25: | ||

* 2024-10: [https://arxiv.org/abs/2410.14670 Decomposing The Dark Matter of Sparse Autoencoders] ([https://github.com/JoshEngels/SAE-Dark-Matter code]) Shows that SAE errors are predictable | * 2024-10: [https://arxiv.org/abs/2410.14670 Decomposing The Dark Matter of Sparse Autoencoders] ([https://github.com/JoshEngels/SAE-Dark-Matter code]) Shows that SAE errors are predictable | ||

* 2024-10: [https://arxiv.org/abs/2410.13928 Automatically Interpreting Millions of Features in Large Language Models] | * 2024-10: [https://arxiv.org/abs/2410.13928 Automatically Interpreting Millions of Features in Large Language Models] | ||

| + | * 2024-10: [https://arxiv.org/abs/2410.21331 Beyond Interpretability: The Gains of Feature Monosemanticity on Model Robustness] | ||

* 2024-12: [https://arxiv.org/abs/2412.04139 Monet: Mixture of Monosemantic Experts for Transformers] | * 2024-12: [https://arxiv.org/abs/2412.04139 Monet: Mixture of Monosemantic Experts for Transformers] | ||

* 2024-12: [https://www.lesswrong.com/posts/zbebxYCqsryPALh8C/matryoshka-sparse-autoencoders Matryoshka Sparse Autoencoders] | * 2024-12: [https://www.lesswrong.com/posts/zbebxYCqsryPALh8C/matryoshka-sparse-autoencoders Matryoshka Sparse Autoencoders] | ||

| Line 27: | Line 31: | ||

* 2025-01: [https://arxiv.org/abs/2501.19406 Low-Rank Adapting Models for Sparse Autoencoders] | * 2025-01: [https://arxiv.org/abs/2501.19406 Low-Rank Adapting Models for Sparse Autoencoders] | ||

* 2025-02: [https://arxiv.org/abs/2502.03714 Universal Sparse Autoencoders: Interpretable Cross-Model Concept Alignment] | * 2025-02: [https://arxiv.org/abs/2502.03714 Universal Sparse Autoencoders: Interpretable Cross-Model Concept Alignment] | ||

| + | * 2025-02: [https://arxiv.org/abs/2502.06755 Sparse Autoencoders for Scientifically Rigorous Interpretation of Vision Models] | ||

| + | * 2025-03: [https://arxiv.org/abs/2503.00177 Steering Large Language Model Activations in Sparse Spaces] | ||

| + | * 2025-03: [https://arxiv.org/abs/2503.01776 Beyond Matryoshka: Revisiting Sparse Coding for Adaptive Representation] | ||

| + | * 2025-03: [https://arxiv.org/abs/2503.01824 From superposition to sparse codes: interpretable representations in neural networks] | ||

| + | * 2025-03: [https://arxiv.org/abs/2503.18878 I Have Covered All the Bases Here: Interpreting Reasoning Features in Large Language Models via Sparse Autoencoders] | ||

===Counter-Results=== | ===Counter-Results=== | ||

| Line 34: | Line 43: | ||

* 2025-01: [https://arxiv.org/abs/2501.17727 Sparse Autoencoders Can Interpret Randomly Initialized Transformers] | * 2025-01: [https://arxiv.org/abs/2501.17727 Sparse Autoencoders Can Interpret Randomly Initialized Transformers] | ||

* 2025-02: [https://arxiv.org/abs/2502.04878 Sparse Autoencoders Do Not Find Canonical Units of Analysis] | * 2025-02: [https://arxiv.org/abs/2502.04878 Sparse Autoencoders Do Not Find Canonical Units of Analysis] | ||

| + | * 2025-03: [https://www.alignmentforum.org/posts/4uXCAJNuPKtKBsi28/ Negative Results for SAEs On Downstream Tasks and Deprioritising SAE Research] | ||

| + | |||

| + | ==Coding Models== | ||

| + | * '''Sparse Auto Encoders''': See Semanticity. | ||

| + | * [https://github.com/saprmarks/dictionary_learning dictionary_learning] | ||

| + | * [https://transformer-circuits.pub/2024/jan-update/index.html#predict-future Predicting Future Activations] | ||

| + | * 2024-06: [https://arxiv.org/abs/2406.11944 Transcoders Find Interpretable LLM Feature Circuits] | ||

| + | * 2024-10: [https://transformer-circuits.pub/2024/crosscoders/index.html Sparse Crosscoders for Cross-Layer Features and Model Diffing] | ||

==Reward Functions== | ==Reward Functions== | ||

| Line 152: | Line 169: | ||

* 2024-06: [https://arxiv.org/abs/2406.11717 Refusal in Language Models Is Mediated by a Single Direction] | * 2024-06: [https://arxiv.org/abs/2406.11717 Refusal in Language Models Is Mediated by a Single Direction] | ||

* 2025-02: [https://martins1612.github.io/emergent_misalignment_betley.pdf Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs] ([https://x.com/OwainEvans_UK/status/1894436637054214509 demonstrates] [https://x.com/ESYudkowsky/status/1894453376215388644 entangling] of concepts into a single preference vector) | * 2025-02: [https://martins1612.github.io/emergent_misalignment_betley.pdf Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs] ([https://x.com/OwainEvans_UK/status/1894436637054214509 demonstrates] [https://x.com/ESYudkowsky/status/1894453376215388644 entangling] of concepts into a single preference vector) | ||

| + | * 2025-03: [https://arxiv.org/abs/2503.03666 Analogical Reasoning Inside Large Language Models: Concept Vectors and the Limits of Abstraction] | ||

==Other== | ==Other== | ||

| Line 169: | Line 187: | ||

* 2021-02: [https://arxiv.org/abs/2102.06701 Explaining Neural Scaling Laws] (Google DeepMind) | * 2021-02: [https://arxiv.org/abs/2102.06701 Explaining Neural Scaling Laws] (Google DeepMind) | ||

* 2022-03: [https://arxiv.org/abs/2203.15556 Training Compute-Optimal Large Language Models] (Chinchilla, Google DeepMind) | * 2022-03: [https://arxiv.org/abs/2203.15556 Training Compute-Optimal Large Language Models] (Chinchilla, Google DeepMind) | ||

| + | * 2025-03: [https://arxiv.org/abs/2503.04715 Predictable Scale: Part I -- Optimal Hyperparameter Scaling Law in Large Language Model Pretraining] | ||

| + | * 2025-03: [https://arxiv.org/abs/2503.10061 Compute Optimal Scaling of Skills: Knowledge vs Reasoning] | ||

=Information Processing/Storage= | =Information Processing/Storage= | ||

| + | * 2020-02: [https://arxiv.org/abs/2002.10689 A Theory of Usable Information Under Computational Constraints] | ||

* "A transformer's depth affects its reasoning capabilities, whilst model size affects its knowledge capacity" ([https://x.com/danielhanchen/status/1835684061475655967 c.f.]) | * "A transformer's depth affects its reasoning capabilities, whilst model size affects its knowledge capacity" ([https://x.com/danielhanchen/status/1835684061475655967 c.f.]) | ||

** 2024-02: [https://arxiv.org/abs/2402.14905 MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases] | ** 2024-02: [https://arxiv.org/abs/2402.14905 MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases] | ||

| Line 178: | Line 199: | ||

* 2024-10: [https://arxiv.org/abs/2407.01687 Deciphering the Factors Influencing the Efficacy of Chain-of-Thought: Probability, Memorization, and Noisy Reasoning]. CoT involves both memorization and (probabilistic) reasoning | * 2024-10: [https://arxiv.org/abs/2407.01687 Deciphering the Factors Influencing the Efficacy of Chain-of-Thought: Probability, Memorization, and Noisy Reasoning]. CoT involves both memorization and (probabilistic) reasoning | ||

* 2024-11: [https://arxiv.org/abs/2411.16679 Do Large Language Models Perform Latent Multi-Hop Reasoning without Exploiting Shortcuts?] | * 2024-11: [https://arxiv.org/abs/2411.16679 Do Large Language Models Perform Latent Multi-Hop Reasoning without Exploiting Shortcuts?] | ||

| + | * 2025-03: [https://www.arxiv.org/abs/2503.03961 A Little Depth Goes a Long Way: The Expressive Power of Log-Depth Transformers] | ||

==Tokenization== | ==Tokenization== | ||

| Line 187: | Line 209: | ||

* 2024-12: [https://arxiv.org/abs/2412.11521 On the Ability of Deep Networks to Learn Symmetries from Data: A Neural Kernel Theory] | * 2024-12: [https://arxiv.org/abs/2412.11521 On the Ability of Deep Networks to Learn Symmetries from Data: A Neural Kernel Theory] | ||

* 2025-01: [https://arxiv.org/abs/2501.12391 Physics of Skill Learning] | * 2025-01: [https://arxiv.org/abs/2501.12391 Physics of Skill Learning] | ||

| + | |||

| + | ===Cross-modal knowledge transfer=== | ||

| + | * 2022-03: [https://arxiv.org/abs/2203.07519 Leveraging Visual Knowledge in Language Tasks: An Empirical Study on Intermediate Pre-training for Cross-modal Knowledge Transfer] | ||

| + | * 2023-05: [https://arxiv.org/abs/2305.07358 Towards Versatile and Efficient Visual Knowledge Integration into Pre-trained Language Models with Cross-Modal Adapters] | ||

| + | * 2025-02: [https://arxiv.org/abs/2502.06755 Sparse Autoencoders for Scientifically Rigorous Interpretation of Vision Models]: CLIP learns a richer set of aggregated representations (e.g. for a culture or country) than a vision-only model. | ||

==Hidden State== | ==Hidden State== | ||

* 2025-02: [https://arxiv.org/abs/2502.06258 Emergent Response Planning in LLM]: They show that the latent representation contains information beyond that needed for the next token (i.e. the model learns to "plan ahead" and encode information relevant to future tokens) | * 2025-02: [https://arxiv.org/abs/2502.06258 Emergent Response Planning in LLM]: They show that the latent representation contains information beyond that needed for the next token (i.e. the model learns to "plan ahead" and encode information relevant to future tokens) | ||

| + | * 2025-03: [https://arxiv.org/abs/2503.02854 (How) Do Language Models Track State?] | ||

| + | |||

| + | ==Function Approximation== | ||

| + | * 2022-08: [https://arxiv.org/abs/2208.01066 What Can Transformers Learn In-Context? A Case Study of Simple Function Classes]: can learn linear functions (equivalent to least-squares estimator) | ||

| + | * 2022-11: [https://arxiv.org/abs/2211.09066 Teaching Algorithmic Reasoning via In-context Learning]: Simple arithmetic | ||

| + | * 2022-11: [https://arxiv.org/abs/2211.15661 What learning algorithm is in-context learning? Investigations with linear models] ([https://github.com/ekinakyurek/google-research/tree/master/incontext code]): can learn linear regression | ||

| + | * 2022-12: [https://arxiv.org/abs/2212.07677 Transformers learn in-context by gradient descent] | ||

| + | * 2023-06: [https://arxiv.org/abs/2306.00297 Transformers learn to implement preconditioned gradient descent for in-context learning] | ||

| + | * 2023-07: [https://arxiv.org/abs/2307.03576 One Step of Gradient Descent is Provably the Optimal In-Context Learner with One Layer of Linear Self-Attention] | ||

| + | * 2024-04: [https://arxiv.org/abs/2404.02893 ChatGLM-Math: Improving Math Problem-Solving in Large Language Models with a Self-Critique Pipeline] | ||

| + | * 2025-02: [https://arxiv.org/abs/2502.20545 SoS1: O1 and R1-Like Reasoning LLMs are Sum-of-Square Solvers] | ||

| + | * 2025-02: [https://arxiv.org/abs/2502.21212 Transformers Learn to Implement Multi-step Gradient Descent with Chain of Thought] | ||

=Failure Modes= | =Failure Modes= | ||

| Line 196: | Line 235: | ||

* 2023-09: [https://arxiv.org/abs/2309.13638 Embers of Autoregression: Understanding Large Language Models Through the Problem They are Trained to Solve] (biases towards "common" numbers, in-context CoT can reduce performance by incorrectly priming, etc.) | * 2023-09: [https://arxiv.org/abs/2309.13638 Embers of Autoregression: Understanding Large Language Models Through the Problem They are Trained to Solve] (biases towards "common" numbers, in-context CoT can reduce performance by incorrectly priming, etc.) | ||

* 2023-11: [https://arxiv.org/abs/2311.16093 Visual cognition in multimodal large language models] (models lack human-like visual understanding) | * 2023-11: [https://arxiv.org/abs/2311.16093 Visual cognition in multimodal large language models] (models lack human-like visual understanding) | ||

| + | |||

| + | ==Jagged Frontier== | ||

| + | * 2024-07: [https://arxiv.org/abs/2407.03211 How Does Quantization Affect Multilingual LLMs?]: Quantization degrades different languages by differing amounts | ||

| + | * 2025-03: [https://arxiv.org/abs/2503.10061v1 Compute Optimal Scaling of Skills: Knowledge vs Reasoning]: Scaling laws are skill-dependent | ||

=Psychology= | =Psychology= | ||

Latest revision as of 14:41, 31 March 2025


Interpretability

- 2017-01: Learning to Generate Reviews and Discovering Sentiment

- 2025-02: Neural Interpretable Reasoning

Mechanistic Interpretability

- 2020-03: OpenAI: Zoom In: An Introduction to Circuits

- 2021-12: Anthropic: A Mathematical Framework for Transformer Circuits

- 2022-09: Interpretability in the Wild: a Circuit for Indirect Object Identification in GPT-2 Small

- 2023-01: Tracr: Compiled Transformers as a Laboratory for Interpretability (code)

- 2024-07: Anthropic: Circuits Update

- 2025-01: Interpretability in Parameter Space: Minimizing Mechanistic Description Length with Attribution-based Parameter Decomposition (blog post)

- 2025-01: Review: Open Problems in Mechanistic Interpretability

- 2025-03: Anthropic: Tracing the thoughts of a large language model

Semanticity

- 2023-09: Sparse Autoencoders Find Highly Interpretable Features in Language Models

- Anthropic monosemanticity interpretation of LLM features:

- 2024-06: OpenAI: Scaling and evaluating sparse autoencoders

- 2024-08: Showing SAE Latents Are Not Atomic Using Meta-SAEs (demo)

- 2024-10: Efficient Dictionary Learning with Switch Sparse Autoencoders (code) More efficient SAE generation

- 2024-10: Decomposing The Dark Matter of Sparse Autoencoders (code) Shows that SAE errors are predictable

- 2024-10: Automatically Interpreting Millions of Features in Large Language Models

- 2024-10: Beyond Interpretability: The Gains of Feature Monosemanticity on Model Robustness

- 2024-12: Monet: Mixture of Monosemantic Experts for Transformers

- 2024-12: Matryoshka Sparse Autoencoders

- 2024-12: Learning Multi-Level Features with Matryoshka SAEs

- 2025-01: Low-Rank Adapting Models for Sparse Autoencoders

- 2025-02: Universal Sparse Autoencoders: Interpretable Cross-Model Concept Alignment

- 2025-02: Sparse Autoencoders for Scientifically Rigorous Interpretation of Vision Models

- 2025-03: Steering Large Language Model Activations in Sparse Spaces

- 2025-03: Beyond Matryoshka: Revisiting Sparse Coding for Adaptive Representation

- 2025-03: From superposition to sparse codes: interpretable representations in neural networks

- 2025-03: I Have Covered All the Bases Here: Interpreting Reasoning Features in Large Language Models via Sparse Autoencoders

Counter-Results

- 2020-10: Towards falsifiable interpretability research

- 2025-01: Sparse Autoencoders Trained on the Same Data Learn Different Features

- 2025-01: AxBench: Steering LLMs? Even Simple Baselines Outperform Sparse Autoencoders

- 2025-01: Sparse Autoencoders Can Interpret Randomly Initialized Transformers

- 2025-02: Sparse Autoencoders Do Not Find Canonical Units of Analysis

- 2025-03: Negative Results for SAEs On Downstream Tasks and Deprioritising SAE Research

Coding Models

- Sparse Auto Encoders: See Semanticity.

- dictionary_learning

- Predicting Future Activations

- 2024-06: Transcoders Find Interpretable LLM Feature Circuits

- 2024-10: Sparse Crosscoders for Cross-Layer Features and Model Diffing

Reward Functions

Symbolic and Notation

- A Mathematical Framework for Transformer Circuits

- Beyond Euclid: An Illustrated Guide to Modern Machine Learning with Geometric, Topological, and Algebraic Structures

- 2024-07: On the Anatomy of Attention: Introduces category-theoretic diagrammatic formalism for DL architectures

- 2024-11: diagrams to represent algorithms

- 2024-12: FlashAttention on a Napkin: A Diagrammatic Approach to Deep Learning IO-Awareness

Mathematical

Geometric

- 2023-11: The Linear Representation Hypothesis and the Geometry of Large Language Models

- 2024-06: The Geometry of Categorical and Hierarchical Concepts in Large Language Models

- Natural hierarchies of concepts---which occur throughout natural language and especially in scientific ontologies---are represented in the model's internal vectorial space as polytopes that can be decomposed into simplexes of mutually-exclusive categories.

- 2024-07: Reasoning in Large Language Models: A Geometric Perspective

- 2024-09: Deep Manifold Part 1: Anatomy of Neural Network Manifold

- 2024-10: The Geometry of Concepts: Sparse Autoencoder Feature Structure

- Tegmark et al. report multi-scale structure: 1) “atomic” small-scale, 2) “brain” intermediate-scale, and 3) “galaxy” large-scale

- 2025-02: The Geometry of Prompting: Unveiling Distinct Mechanisms of Task Adaptation in Language Models

Topography

Challenges

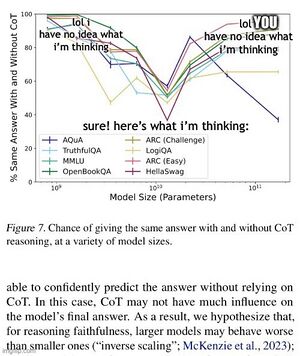

- 2023-07: Measuring Faithfulness in Chain-of-Thought Reasoning (roughly proves that sufficiently large models do not generate CoT that actually captures their internal reasoning)

Heuristic Understanding

Emergent Internal Model Building

- 2023-07: A Theory for Emergence of Complex Skills in Language Models

- 2024-06: Emergence of Hidden Capabilities: Exploring Learning Dynamics in Concept Space

Semantic Directions

Directions, e.g.: f(king)-f(man)+f(woman)=f(queen) or f(sushi)-f(Japan)+f(Italy)=f(pizza)

- Efficient Estimation of Word Representations in Vector Space

- Linguistic Regularities in Continuous Space Word Representations

- Word Embeddings, Analogies, and Machine Learning: Beyond king - man + woman = queen

- Glove: Global vectors for word representation

- Using Word2Vec to process big text data

- The geometry of truth: Emergent linear structure in large language model representations of true/false datasets (true/false)

- Monotonic Representation of Numeric Properties in Language Models (numeric directions)
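The direction arithmetic above, f(king)-f(man)+f(woman)=f(queen), can be sketched with a toy lookup table. This is only an illustration: the three-dimensional vectors in `EMB` are hand-made assumptions (roughly "royalty", "maleness", "femaleness"), not embeddings from any trained model, and `analogy` is a hypothetical helper name.

```python
import math

# Hypothetical hand-made embeddings: (royalty, maleness, femaleness).
# Real word vectors are learned and have hundreds of dimensions.
EMB = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.8, 0.1],
    "woman": [0.1, 0.1, 0.8],
    "apple": [0.0, 0.3, 0.3],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def analogy(a, b, c, emb=EMB):
    """Return the word closest to f(a) - f(b) + f(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(emb[a], emb[b], emb[c])]
    candidates = [w for w in emb if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(emb[w], target))


print(analogy("king", "man", "woman"))  # queen
```

Excluding the three input words from the candidates is a common convention in analogy evaluation; without it the nearest neighbour is often one of the inputs.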

Task vectors:

- Function Vectors in Large Language Models

- In-context learning creates task vectors

- Extracting SAE task features for in-context learning

- Emergence of Abstractions: Concept Encoding and Decoding Mechanism for In-Context Learning in Transformers

Feature Geometry Reproduces Problem-space

- Emergent World Representations: Exploring a Sequence Model Trained on a Synthetic Task (Othello)

- Emergent linear representations in world models of self-supervised sequence models (Othello)

- What learning algorithm is in-context learning? Investigations with linear models

- Emergent analogical reasoning in large language models

- Language Models Represent Space and Time (Maps of world, US)

- Not All Language Model Features Are Linear (Days of week form ring, etc.)

- Evaluating the World Model Implicit in a Generative Model (Map of Manhattan)

- Reliable precipitation nowcasting using probabilistic diffusion models. Generation of precipitation map imagery is predictive of actual future weather; implies model is learning scientifically-relevant modeling.

- The Platonic Representation Hypothesis: Different models (including across modalities) are converging to a consistent world model.

- ICLR: In-Context Learning of Representations

- Language Models Use Trigonometry to Do Addition: Numbers arranged in helix to enable addition

Capturing Physics

- 2020-09: Learning to Identify Physical Parameters from Video Using Differentiable Physics

- 2022-07: Self-Supervised Learning for Videos: A Survey

- 2025-02: FAIR at Meta: Intuitive physics understanding emerges from self-supervised pretraining on natural videos

Theory of Mind

- Evaluating Large Language Models in Theory of Mind Tasks

- Looking Inward: Language Models Can Learn About Themselves by Introspection

- Tell me about yourself: LLMs are aware of their learned behaviors

Skeptical

Information Processing

- 2021-03: Pretrained Transformers as Universal Computation Engines

- 2023-04: Why think step by step? Reasoning emerges from the locality of experience

- 2023-10: What's the Magic Word? A Control Theory of LLM Prompting

- 2024-02: Chain of Thought Empowers Transformers to Solve Inherently Serial Problems: Proves that constant-depth transformers can solve any problem solvable by boolean circuits of size T, if they can generate T intermediate (chain-of-thought) tokens

- 2024-07: Physics of Language Models: Part 2.1, Grade-School Math and the Hidden Reasoning Process

- Models learn reasoning skills (they are not merely memorizing solution templates). They can mentally generate simple short plans (like humans).

- When presented facts, models develop internal understanding of what parameters (recursively) depend on each other. This occurs even before an explicit question is asked (i.e. before the task is defined). This appears to be different from human reasoning.

- Model depth matters for reasoning. This cannot be mitigated by chain-of-thought prompting (which allows models to develop and then execute plans) since even a single CoT step may require deep, multi-step reasoning/planning.

- 2024-11: Ask, and it shall be given: Turing completeness of prompting

Generalization

- 2024-06: Connecting the Dots: LLMs can Infer and Verbalize Latent Structure from Disparate Training Data

Grokking

- 2022-01: Grokking: Generalization Beyond Overfitting on Small Algorithmic Datasets

- 2022-05: Towards Understanding Grokking: An Effective Theory of Representation Learning

- 2024-01: Critical Data Size of Language Models from a Grokking Perspective

- 2024-02: Unified View of Grokking, Double Descent and Emergent Abilities: A Perspective from Circuits Competition

- 2024-12: How to explain grokking

Tests of Resilience to Dropouts/etc.

- 2024-02: Explorations of Self-Repair in Language Models

- 2024-06: What Matters in Transformers? Not All Attention is Needed

- Removing entire transformer blocks leads to significant performance degradation

- Removing MLP layers results in significant performance degradation

- Removing attention layers causes almost no performance degradation

- E.g. deleting half of the attention layers (48% speed-up) leads to only a 2.4% decrease on the benchmarks

- 2024-06: The Remarkable Robustness of LLMs: Stages of Inference?

- They intentionally break the network (swapping layers), yet it continues to work remarkably well. This suggests LLMs are quite robust, and allows them to identify different stages in processing.

- They also use these interventions to infer what different layers are doing. They break apart the LLM transformer layers into four stages:

- Detokenization: Raw tokens are converted into meaningful entities that take into account local context (especially using nearby tokens).

- Feature engineering: Features are progressively refined. Factual knowledge is leveraged.

- Prediction ensembling: Predictions (for the ultimately-selected next-token) emerge. A sort of consensus voting is used, with "prediction neurons" and "suppression neurons" playing a major role in upvoting/downvoting.

- Residual sharpening: The semantic representations are collapsed into specific next-token predictions. There is a strong emphasis on suppression neurons eliminating options. The confidence is calibrated.

- This structure can be thought of as two halves (being roughly dual to each other): the first half broadens (goes from distinct tokens to a rich/elaborate concept-space) and the second half collapses (goes from rich concepts to concrete token predictions).

Semantic Vectors

- 2024-06: Refusal in Language Models Is Mediated by a Single Direction

- 2025-02: Emergent Misalignment: Narrow finetuning can produce broadly misaligned LLMs (demonstrates entangling of concepts into a single preference vector)

- 2025-03: Analogical Reasoning Inside Large Language Models: Concept Vectors and the Limits of Abstraction
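The idea that a behavior can be mediated by a single direction can be sketched as follows, assuming the direction is estimated as a difference of mean activations and then projected out. The activation vectors here are made-up toy numbers, and `find_direction` and `ablate` are hypothetical helper names; this is a sketch of the general technique, not the papers' code.

```python
import math


def mean(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def find_direction(acts_with, acts_without):
    """Unit vector along mean(acts_with) - mean(acts_without)."""
    diff = [p - q for p, q in zip(mean(acts_with), mean(acts_without))]
    norm = math.sqrt(dot(diff, diff))
    return [d / norm for d in diff]


def ablate(x, r_hat):
    """Remove the component of x along the unit direction r_hat."""
    proj = dot(x, r_hat)
    return [xi - proj * ri for xi, ri in zip(x, r_hat)]


# Toy activations (assumed): the behavior shows up mostly in the first coordinate.
acts_with = [[2.0, 1.0, 0.0], [2.2, 0.8, 0.1]]
acts_without = [[0.0, 1.0, 0.0], [0.2, 0.8, 0.1]]

r_hat = find_direction(acts_with, acts_without)
ablated = ablate([3.0, 1.0, -2.0], r_hat)
print(r_hat)    # close to [1.0, 0.0, 0.0]
print(ablated)  # first coordinate close to 0, the others unchanged
```

After ablation the vector has no component along `r_hat`, so any downstream computation that reads only that direction sees nothing.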

Other

- 2024-11: Deep Learning Through A Telescoping Lens: A Simple Model Provides Empirical Insights On Grokking, Gradient Boosting & Beyond

- 2024-11: Language Models are Hidden Reasoners: Unlocking Latent Reasoning Capabilities via Self-Rewarding (code)

- 2024-11: Procedural Knowledge in Pretraining Drives Reasoning in Large Language Models: LLMs learn reasoning by extracting procedures from training data, not by memorizing specific answers

- 2024-11: LLMs Do Not Think Step-by-step In Implicit Reasoning

- 2024-12: The Complexity Dynamics of Grokking

Scaling Laws

- 2017-12: Deep Learning Scaling is Predictable, Empirically (Baidu)

- 2019-03: The Bitter Lesson (Rich Sutton)

- 2020-01: Scaling Laws for Neural Language Models (OpenAI)

- 2020-10: Scaling Laws for Autoregressive Generative Modeling (OpenAI)

- 2020-05: The Scaling Hypothesis (Gwern)

- 2021-08: Scaling Laws for Deep Learning

- 2021-02: Explaining Neural Scaling Laws (Google DeepMind)

- 2022-03: Training Compute-Optimal Large Language Models (Chinchilla, Google DeepMind)

- 2025-03: Predictable Scale: Part I -- Optimal Hyperparameter Scaling Law in Large Language Model Pretraining

- 2025-03: Compute Optimal Scaling of Skills: Knowledge vs Reasoning

Information Processing/Storage

- 2020-02: A Theory of Usable Information Under Computational Constraints

- "A transformer's depth affects its reasoning capabilities, whilst model size affects its knowledge capacity" (c.f.)

- 2024-02: MobileLLM: Optimizing Sub-billion Parameter Language Models for On-Device Use Cases

- 2024-04: The Illusion of State in State-Space Models (figure 3)

- 2024-08: Gemma 2: Improving Open Language Models at a Practical Size (table 9)

- 2024-09: Schrodinger's Memory: Large Language Models

- 2024-10: Deciphering the Factors Influencing the Efficacy of Chain-of-Thought: Probability, Memorization, and Noisy Reasoning. CoT involves both memorization and (probabilistic) reasoning

- 2024-11: Do Large Language Models Perform Latent Multi-Hop Reasoning without Exploiting Shortcuts?

- 2025-03: A Little Depth Goes a Long Way: The Expressive Power of Log-Depth Transformers

Tokenization

For numbers/math

- 2024-02: Tokenization counts: the impact of tokenization on arithmetic in frontier LLMs: L2R vs. R2L yields different performance on math
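The L2R vs. R2L distinction can be illustrated with a small sketch, assuming digits are grouped into chunks of up to three (the chunk size and the function names are assumptions for illustration; real tokenizers differ in detail). Grouping from the right keeps chunks aligned with place value (ones, thousands, millions), while grouping from the left does not.

```python
def chunk_l2r(digits, size=3):
    """Group digits left-to-right: the last chunk may be short."""
    return [digits[i:i + size] for i in range(0, len(digits), size)]


def chunk_r2l(digits, size=3):
    """Group digits right-to-left: the first chunk may be short."""
    head = len(digits) % size
    chunks = [digits[:head]] if head else []
    chunks += [digits[i:i + size] for i in range(head, len(digits), size)]
    return chunks


print(chunk_l2r("1234567"))  # ['123', '456', '7']
print(chunk_r2l("1234567"))  # ['1', '234', '567']
```

With R2L grouping, the chunks of two numbers being added line up by place value, which is one plausible reason the two schemes give different arithmetic performance.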

Learning/Training

- 2018-03: The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks: Sparse neural networks are optimal, but it is difficult to identify the right architecture and train it. Deep learning typically consists of training a dense neural network, which makes it easier to learn an internal sparse circuit optimal to a particular problem.

- 2024-12: On the Ability of Deep Networks to Learn Symmetries from Data: A Neural Kernel Theory

- 2025-01: Physics of Skill Learning

Cross-modal knowledge transfer

- 2022-03: Leveraging Visual Knowledge in Language Tasks: An Empirical Study on Intermediate Pre-training for Cross-modal Knowledge Transfer

- 2023-05: Towards Versatile and Efficient Visual Knowledge Integration into Pre-trained Language Models with Cross-Modal Adapters

- 2025-02: Sparse Autoencoders for Scientifically Rigorous Interpretation of Vision Models: CLIP learns a richer set of aggregated representations (e.g. for a culture or country) than a vision-only model.

Hidden State

- 2025-02: Emergent Response Planning in LLM: They show that the latent representation contains information beyond that needed for the next token (i.e. the model learns to "plan ahead" and encode information relevant to future tokens)

- 2025-03: (How) Do Language Models Track State?

Function Approximation

- 2022-08: What Can Transformers Learn In-Context? A Case Study of Simple Function Classes: can learn linear functions (equivalent to least-squares estimator)

- 2022-11: Teaching Algorithmic Reasoning via In-context Learning: Simple arithmetic

- 2022-11: What learning algorithm is in-context learning? Investigations with linear models (code): can learn linear regression

- 2022-12: Transformers learn in-context by gradient descent

- 2023-06: Transformers learn to implement preconditioned gradient descent for in-context learning

- 2023-07: One Step of Gradient Descent is Provably the Optimal In-Context Learner with One Layer of Linear Self-Attention

- 2024-04: ChatGLM-Math: Improving Math Problem-Solving in Large Language Models with a Self-Critique Pipeline

- 2025-02: SoS1: O1 and R1-Like Reasoning LLMs are Sum-of-Square Solvers

- 2025-02: Transformers Learn to Implement Multi-step Gradient Descent with Chain of Thought
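Several entries above relate in-context learning of linear functions to the least-squares estimator and to gradient descent. The connection between those two can be sketched on a toy problem, assuming a scalar model y = w·x with no bias (the papers study higher-dimensional settings; the data, learning rate, and function names here are illustrative assumptions). Repeated gradient steps on the squared loss converge to the closed-form least-squares solution.

```python
def least_squares(xs, ys):
    """Closed-form least-squares slope for y = w * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def gd_step(w, xs, ys, lr):
    """One gradient step on the loss (1/2n) * sum((w*x - y)^2)."""
    n = len(xs)
    grad = sum((w * x - y) * x for x, y in zip(xs, ys)) / n
    return w - lr * grad


def gradient_descent(xs, ys, lr=0.1, steps=200, w=0.0):
    """Run several gradient steps starting from w."""
    for _ in range(steps):
        w = gd_step(w, xs, ys, lr)
    return w


# "In-context examples" (assumed): points on the line y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_ls = least_squares(xs, ys)
w_gd = gradient_descent(xs, ys)
print(w_ls, w_gd)   # both close to 2.0
print(w_gd * 4.0)   # prediction for a query x = 4, close to 8.0
```

A single call to `gd_step` from w = 0 gives only a rescaled estimate; the multi-step loop is what recovers the least-squares answer on this data.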

Failure Modes

- 2023-06: Can Large Language Models Infer Causation from Correlation?: Poor causal inference

- 2023-09: The Reversal Curse: LLMs trained on "A is B" fail to learn "B is A"

- 2023-09: Embers of Autoregression: Understanding Large Language Models Through the Problem They are Trained to Solve (biases towards "common" numbers, in-context CoT can reduce performance by incorrectly priming, etc.)

- 2023-11: Visual cognition in multimodal large language models (models lack human-like visual understanding)

Jagged Frontier

- 2024-07: How Does Quantization Affect Multilingual LLMs?: Quantization degrades different languages by differing amounts

- 2025-03: Compute Optimal Scaling of Skills: Knowledge vs Reasoning: Scaling laws are skill-dependent

Psychology

Allow LLM to think

In-context Learning

- 2021-10: MetaICL: Learning to Learn In Context

- 2022-02: Rethinking the Role of Demonstrations: What Makes In-Context Learning Work?

- 2022-08: What Can Transformers Learn In-Context? A Case Study of Simple Function Classes

- 2022-11: What learning algorithm is in-context learning? Investigations with linear models

- 2022-12: Transformers learn in-context by gradient descent

Reasoning (CoT, etc.)

- 2025-01: Large Language Models Think Too Fast To Explore Effectively

- 2025-01: Thoughts Are All Over the Place: On the Underthinking of o1-Like LLMs

- 2025-01: Are DeepSeek R1 And Other Reasoning Models More Faithful?: reasoning models can provide faithful explanations for why their reasoning is correct