Difference between revisions of "AI benchmarks"

KevinYager (talk | contribs) (→Software/Coding) |

KevinYager (talk | contribs) (→Hallucination) |


| (35 intermediate revisions by the same user not shown) | |||


| − | = | + | =General= |

| − | * [https:// | + | * [https://lifearchitect.ai/models-table/ Models Table] (lifearchitect.ai) |

| − | * [https:// | + | * [https://artificialanalysis.ai/models Artificial Analysis] |

| − | * [https:// | + | * [https://epoch.ai/ Epoch AI] |

| − | * [https:// | + | ** [https://epoch.ai/data/notable-ai-models Notable AI models] |

| − | * [https:// | + | ** [https://epoch.ai/data/ai-benchmarking-dashboard AI benchmarking dashboard] |

| − | * [https:// | + | |

| + | ==Lists of Benchmarks== | ||

| + | * 2025-05: [https://x.com/scaling01 Lisan al Gaib]: [https://x.com/scaling01/status/1919092778648408363 The Ultimate LLM Benchmark list] | ||

| + | ** [https://x.com/scaling01/status/1919217718420508782 Average across 28 benchmarks] | ||

| + | |||

| + | ==Analysis of Methods== | ||

| + | * 2025-04: [https://arxiv.org/abs/2504.20879 The Leaderboard Illusion] | ||

=Methods= | =Methods= | ||

* [https://openreview.net/pdf?id=fz969ahcvJ AidanBench: Evaluating Novel Idea Generation on Open-Ended Questions] ([https://github.com/aidanmclaughlin/AidanBench code]) | * [https://openreview.net/pdf?id=fz969ahcvJ AidanBench: Evaluating Novel Idea Generation on Open-Ended Questions] ([https://github.com/aidanmclaughlin/AidanBench code]) | ||

| + | ** [https://aidanbench.com/ Leaderboard] | ||

| + | ** [https://x.com/scaling01/status/1897301054431064391 Suggestion to use] [https://en.wikipedia.org/wiki/Borda_count Borda count] | ||

| + | ** 2025-04: [https://x.com/scaling01/status/1910499781601874008 add] Quasar Alpha, Optimus Alpha, Llama-4 Scout and Llama-4 Maverick | ||

* [https://arxiv.org/abs/2502.01100 ZebraLogic: On the Scaling Limits of LLMs for Logical Reasoning]. Assess reasoning using puzzles of tunable complexity. | * [https://arxiv.org/abs/2502.01100 ZebraLogic: On the Scaling Limits of LLMs for Logical Reasoning]. Assess reasoning using puzzles of tunable complexity. | ||

| + | * 2025-08: [https://arxiv.org/abs/2508.12790 Reinforcement Learning with Rubric Anchors] ([https://huggingface.co/inclusionAI/Rubicon-Preview model]) | ||

| + | |||

| + | ==Task Length== | ||

| + | * 2020-09: Ajeya Cotra: [https://www.lesswrong.com/posts/KrJfoZzpSDpnrv9va/draft-report-on-ai-timelines Draft report on AI timelines] | ||

| + | * 2025-03: [https://arxiv.org/abs/2503.14499 Measuring AI Ability to Complete Long Tasks] | ||

| + | [[Image:GmZHL8xWQAAtFlF.jpeg|450px]] | ||

=Assess Specific Attributes= | =Assess Specific Attributes= | ||

| + | ==Various== | ||

| + | * [https://lmsys.org/ LMSYS]: Human preference ranking leaderboard | ||

| + | * [https://trackingai.org/home Tracking AI]: "IQ" leaderboard | ||

| + | * [https://livebench.ai/#/ LiveBench: A Challenging, Contamination-Free LLM Benchmark] | ||

| + | * [https://github.com/lechmazur/generalization/ LLM Thematic Generalization Benchmark] | ||

| + | |||

| + | ==Hallucination== | ||

| + | * [https://www.vectara.com/ Vectara] [https://github.com/vectara/hallucination-leaderboard Hallucination Leaderboard] | ||

| + | * [https://github.com/lechmazur/confabulations/ LLM Confabulation (Hallucination) Leaderboard for RAG] | ||

| + | * 2025-09: OpenAI: [https://cdn.openai.com/pdf/d04913be-3f6f-4d2b-b283-ff432ef4aaa5/why-language-models-hallucinate.pdf Why Language Models Hallucinate] | ||

| + | |||

==Software/Coding== | ==Software/Coding== | ||

* 2025-02: [https://arxiv.org/abs/2502.12115 SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering?] ([https://github.com/openai/SWELancer-Benchmark code]) | * 2025-02: [https://arxiv.org/abs/2502.12115 SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering?] ([https://github.com/openai/SWELancer-Benchmark code]) | ||

| + | |||

| + | ==Math== | ||

| + | * [https://www.vals.ai/benchmarks/aime-2025-03-13 AIME Benchmark] | ||

| + | |||

| + | ==Science== | ||

| + | * 2025-07: [https://allenai.org/blog/sciarena SciArena: A New Platform for Evaluating Foundation Models in Scientific Literature Tasks] ([https://sciarena.allen.ai/ vote], [https://huggingface.co/datasets/yale-nlp/SciArena data], [https://github.com/yale-nlp/SciArena code]) | ||

| + | |||

| + | ==Visual== | ||

| + | * 2024-06: [https://charxiv.github.io/ Charting Gaps in Realistic Chart Understanding in Multimodal LLMs] ([https://arxiv.org/abs/2406.18521 preprint], [https://charxiv.github.io/ leaderboard]) | ||

| + | * 2025-03: [https://arxiv.org/abs/2503.14607 Can Large Vision Language Models Read Maps Like a Human?] MapBench | ||

| + | |||

| + | ==Conversation== | ||

| + | * 2025-01: [https://arxiv.org/abs/2501.17399 MultiChallenge: A Realistic Multi-Turn Conversation Evaluation Benchmark Challenging to Frontier LLMs] ([https://scale.com/research/multichallenge project], [https://github.com/ekwinox117/multi-challenge code], [https://scale.com/leaderboard/multichallenge leaderboard]) | ||

==Creativity== | ==Creativity== | ||

| + | * See also: [[AI creativity]] | ||

* 2024-10: [https://arxiv.org/abs/2410.04265 AI as Humanity's Salieri: Quantifying Linguistic Creativity of Language Models via Systematic Attribution of Machine Text against Web Text] | * 2024-10: [https://arxiv.org/abs/2410.04265 AI as Humanity's Salieri: Quantifying Linguistic Creativity of Language Models via Systematic Attribution of Machine Text against Web Text] | ||

| + | * 2024-11: [https://openreview.net/pdf?id=fz969ahcvJ AidanBench: Evaluating Novel Idea Generation on Open-Ended Questions] ([https://github.com/aidanmclaughlin/AidanBench code]) | ||

| + | * 2024-12: [https://arxiv.org/abs/2412.17596 LiveIdeaBench: Evaluating LLMs' Scientific Creativity and Idea Generation with Minimal Context] | ||

| + | * [https://github.com/lechmazur/writing/ LLM Creative Story-Writing Benchmark] | ||

| + | |||

| + | ==Reasoning== | ||

| + | * [https://scale.com/leaderboard/enigma_eval ENIGMAEVAL]: "reasoning" leaderboard ([https://static.scale.com/uploads/654197dc94d34f66c0f5184e/EnigmaEval%20v4.pdf paper]) | ||

| + | * [https://bethgelab.github.io/sober-reasoning/ Sober Reasoning Leaderboard] | ||

| + | ** 2025-04: [https://arxiv.org/abs/2504.07086 A Sober Look at Progress in Language Model Reasoning: Pitfalls and Paths to Reproducibility] | ||

| + | |||

| + | ==Assistant/Agentic== | ||

| + | See: [[AI_Agents#Optimization|AI Agents: Optimization]] | ||

| + | * [https://arxiv.org/abs/2311.12983 GAIA: a benchmark for General AI Assistants] | ||

| + | * [https://www.galileo.ai/blog/agent-leaderboard Galileo AI] [https://huggingface.co/spaces/galileo-ai/agent-leaderboard Agent Leaderboard] | ||

| + | * [https://huggingface.co/spaces/smolagents/smolagents-leaderboard Smolagents LLM Leaderboard]: LLMs powering agents | ||

| + | * OpenAI [https://openai.com/index/paperbench/ PaperBench: Evaluating AI’s Ability to Replicate AI Research] ([https://cdn.openai.com/papers/22265bac-3191-44e5-b057-7aaacd8e90cd/paperbench.pdf paper], [https://github.com/openai/preparedness/tree/main/project/paperbench code]) | ||

| + | * 2025-06: [https://arxiv.org/abs/2506.22419 The Automated LLM Speedrunning Benchmark: Reproducing NanoGPT Improvements] | ||

| + | * [https://hal.cs.princeton.edu/ HAL: Holistic Agent Leaderboard]: the standardized, cost-aware, third-party leaderboard for evaluating agents | ||

| + | |||

| + | ==Science== | ||

| + | See: [[Science_Agents#Science_Benchmarks|Science Benchmarks]] | ||

Latest revision as of 08:11, 8 September 2025


General

- Models Table (lifearchitect.ai)

- Artificial Analysis

- Epoch AI

Lists of Benchmarks

Analysis of Methods

- 2025-04: The Leaderboard Illusion

Methods

- AidanBench: Evaluating Novel Idea Generation on Open-Ended Questions (code)

- Leaderboard

- Suggestion to use Borda count

- 2025-04: add Quasar Alpha, Optimus Alpha, Llama-4 Scout and Llama-4 Maverick

- ZebraLogic: On the Scaling Limits of LLMs for Logical Reasoning. Assess reasoning using puzzles of tunable complexity.

- 2025-08: Reinforcement Learning with Rubric Anchors (model)

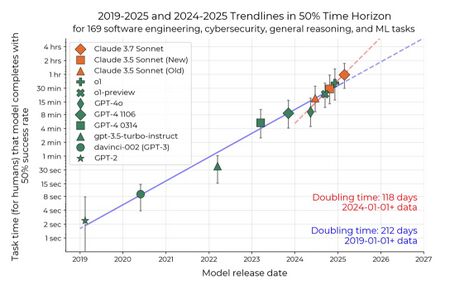

Task Length

- 2020-09: Ajeya Cotra: Draft report on AI timelines

- 2025-03: Measuring AI Ability to Complete Long Tasks

Assess Specific Attributes

Various

- LMSYS: Human preference ranking leaderboard

- Tracking AI: "IQ" leaderboard

- LiveBench: A Challenging, Contamination-Free LLM Benchmark

- LLM Thematic Generalization Benchmark

Hallucination

- Vectara Hallucination Leaderboard

- LLM Confabulation (Hallucination) Leaderboard for RAG

- 2025-09: OpenAI: Why Language Models Hallucinate

Software/Coding

Math

Science

- 2025-07: SciArena: A New Platform for Evaluating Foundation Models in Scientific Literature Tasks (vote, data, code)

Visual

- 2024-06: Charting Gaps in Realistic Chart Understanding in Multimodal LLMs (preprint, leaderboard)

- 2025-03: Can Large Vision Language Models Read Maps Like a Human? MapBench

Conversation

- 2025-01: MultiChallenge: A Realistic Multi-Turn Conversation Evaluation Benchmark Challenging to Frontier LLMs (project, code, leaderboard)

Creativity

- See also: AI creativity

- 2024-10: AI as Humanity's Salieri: Quantifying Linguistic Creativity of Language Models via Systematic Attribution of Machine Text against Web Text

- 2024-11: AidanBench: Evaluating Novel Idea Generation on Open-Ended Questions (code)

- 2024-12: LiveIdeaBench: Evaluating LLMs' Scientific Creativity and Idea Generation with Minimal Context

- LLM Creative Story-Writing Benchmark

Reasoning

- ENIGMAEVAL: "reasoning" leaderboard (paper)

- Sober Reasoning Leaderboard

Assistant/Agentic

- GAIA: a benchmark for General AI Assistants

- Galileo AI Agent Leaderboard

- Smolagents LLM Leaderboard: LLMs powering agents

- OpenAI PaperBench: Evaluating AI’s Ability to Replicate AI Research (paper, code)

- 2025-06: The Automated LLM Speedrunning Benchmark: Reproducing NanoGPT Improvements

- HAL: Holistic Agent Leaderboard: the standardized, cost-aware, third-party leaderboard for evaluating agents

Science

See: Science Benchmarks