Difference between revisions of "AI predictions"

KevinYager (talk | contribs) (→Philosophy) |

KevinYager (talk | contribs) (→Surveys of Opinions/Predictions) |


| (171 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | =AGI Achievable= | + | =Capability Scaling= |

| + | * 2019-03: Rich Sutton: [https://www.cs.utexas.edu/~eunsol/courses/data/bitter_lesson.pdf The Bitter Lesson] | ||

| + | * 2020-09: Ajeya Cotra: [https://www.lesswrong.com/posts/KrJfoZzpSDpnrv9va/draft-report-on-ai-timelines Draft report on AI timelines] | ||

| + | * 2022-01: gwern: [https://gwern.net/scaling-hypothesis The Scaling Hypothesis] | ||

| + | * 2023-05: Richard Ngo: [https://www.lesswrong.com/posts/BoA3agdkAzL6HQtQP/clarifying-and-predicting-agi Clarifying and predicting AGI] | ||

| + | * 2024-06: Aidan McLaughlin: [https://yellow-apartment-148.notion.site/AI-Search-The-Bitter-er-Lesson-44c11acd27294f4495c3de778cd09c8d AI Search: The Bitter-er Lesson] | ||

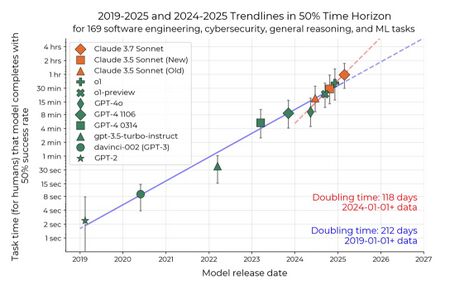

| + | * 2025-03: [https://arxiv.org/abs/2503.14499 Measuring AI Ability to Complete Long Tasks] | ||

| + | ** 2025-04: [https://peterwildeford.substack.com/p/forecaster-reacts-metrs-bombshell Forecaster reacts: METR's bombshell paper about AI acceleration] New data supports an exponential AI curve, but lots of uncertainty remains | ||

| + | ** 2025-04: AI Digest: [https://theaidigest.org/time-horizons A new Moore's Law for AI agents] | ||

| + | [[Image:GmZHL8xWQAAtFlF.jpeg|450px]] | ||

| + | * 2025-04: [https://epoch.ai/blog/trends-in-ai-supercomputers Trends in AI Supercomputers] ([https://arxiv.org/abs/2504.16026 preprint]) | ||

| + | * [https://ai-timeline.org/ The Road to AGI] (timeline visualization) | ||

| + | * 2025-09: [https://arxiv.org/abs/2509.09677 The Illusion of Diminishing Returns: Measuring Long Horizon Execution in LLMs] | ||

| + | * 2025-09: [https://www.julian.ac/blog/2025/09/27/failing-to-understand-the-exponential-again/ Failing to Understand the Exponential, Again] | ||

| + | * 2026-02: Ryan Greenblatt: [https://www.lesswrong.com/posts/rRbDNQLfihiHbXytf/distinguish-between-inference-scaling-and-larger-tasks-use Distinguish between inference scaling and "larger tasks use more compute"] | ||

| + | |||

| + | ==Scaling Laws== | ||

| + | See: [[AI_understanding#Scaling_Laws|Scaling Laws]] | ||

| + | |||

| + | ==AGI Achievable== | ||

* Yoshua Bengio: [https://arxiv.org/abs/2310.17688 Managing extreme AI risks amid rapid progress] | * Yoshua Bengio: [https://arxiv.org/abs/2310.17688 Managing extreme AI risks amid rapid progress] | ||

* Leopold Aschenbrenner: [https://situational-awareness.ai/from-gpt-4-to-agi/#Counting_the_OOMs Situational Awareness: Counting the OOMs] | * Leopold Aschenbrenner: [https://situational-awareness.ai/from-gpt-4-to-agi/#Counting_the_OOMs Situational Awareness: Counting the OOMs] | ||

| Line 6: | Line 25: | ||

* Epoch AI: [https://epoch.ai/trends Machine Learning Trends] | * Epoch AI: [https://epoch.ai/trends Machine Learning Trends] | ||

* AI Digest: [https://theaidigest.org/progress-and-dangers How fast is AI improving?] | * AI Digest: [https://theaidigest.org/progress-and-dangers How fast is AI improving?] | ||

| + | * 2025-06: [https://80000hours.org/agi/guide/when-will-agi-arrive/ The case for AGI by 2030] | ||

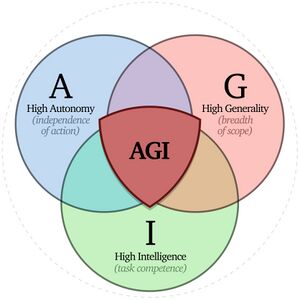

==AGI Definition== | ==AGI Definition== | ||

* 2023-11: Allan Dafoe, Shane Legg, et al.: [https://arxiv.org/abs/2311.02462 Levels of AGI for Operationalizing Progress on the Path to AGI] | * 2023-11: Allan Dafoe, Shane Legg, et al.: [https://arxiv.org/abs/2311.02462 Levels of AGI for Operationalizing Progress on the Path to AGI] | ||

* 2024-04: Bowen Xu: [https://arxiv.org/abs/2404.10731 What is Meant by AGI? On the Definition of Artificial General Intelligence] | * 2024-04: Bowen Xu: [https://arxiv.org/abs/2404.10731 What is Meant by AGI? On the Definition of Artificial General Intelligence] | ||

| + | * 2025-10: Dan Hendrycks et al.: [https://www.agidefinition.ai/paper.pdf A Definition of AGI] | ||

| + | * 2026-01: [https://arxiv.org/abs/2601.07364 On the universal definition of intelligence] | ||

| + | |||

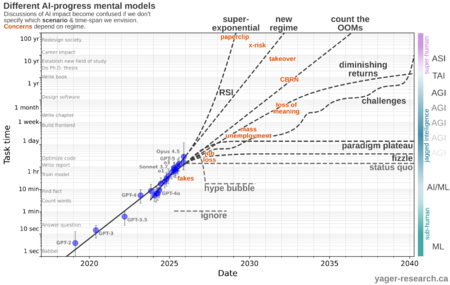

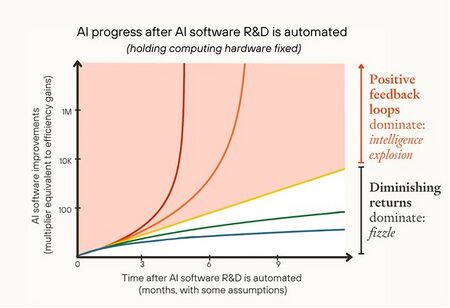

| + | ==Progress Models== | ||

| + | From [http://yager-research.ca/2025/04/ai-impact-predictions/ AI Impact Predictions]: | ||

| + | |||

| + | [[Image:AI impact models-2025 11 24.png|450px]] | ||

=Economic and Political= | =Economic and Political= | ||

| + | * 2019-11: [https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3482150 The Impact of Artificial Intelligence on the Labor Market] | ||

| + | * 2020-06: [https://www.openphilanthropy.org/research/modeling-the-human-trajectory/ Modeling the Human Trajectory] (GDP) | ||

| + | * 2021-06: [https://www.openphilanthropy.org/research/report-on-whether-ai-could-drive-explosive-economic-growth/ Report on Whether AI Could Drive Explosive Economic Growth] | ||

| + | * 2023-10: Marc Andreessen: [https://a16z.com/the-techno-optimist-manifesto/ The Techno-Optimist Manifesto] | ||

* 2023-12: [https://vitalik.eth.limo/general/2023/11/27/techno_optimism.html My techno-optimism]: "defensive acceleration" ([https://vitalik.eth.limo/index.html Vitalik Buterin]) | * 2023-12: [https://vitalik.eth.limo/general/2023/11/27/techno_optimism.html My techno-optimism]: "defensive acceleration" ([https://vitalik.eth.limo/index.html Vitalik Buterin]) | ||

* 2024-03: Noah Smith: [https://www.noahpinion.blog/p/plentiful-high-paying-jobs-in-the Plentiful, high-paying jobs in the age of AI: Comparative advantage is very subtle, but incredibly powerful.] ([https://x.com/liron/status/1768013030741475485 video]) | * 2024-03: Noah Smith: [https://www.noahpinion.blog/p/plentiful-high-paying-jobs-in-the Plentiful, high-paying jobs in the age of AI: Comparative advantage is very subtle, but incredibly powerful.] ([https://x.com/liron/status/1768013030741475485 video]) | ||

| Line 20: | Line 51: | ||

* 2024-12: [https://www.lesswrong.com/posts/KFFaKu27FNugCHFmh/by-default-capital-will-matter-more-than-ever-after-agi By default, capital will matter more than ever after AGI] (L Rudolf L) | * 2024-12: [https://www.lesswrong.com/posts/KFFaKu27FNugCHFmh/by-default-capital-will-matter-more-than-ever-after-agi By default, capital will matter more than ever after AGI] (L Rudolf L) | ||

* 2025-01: [https://lukedrago.substack.com/p/the-intelligence-curse The Intelligence Curse]: With AGI, powerful actors will lose their incentives to invest in people | * 2025-01: [https://lukedrago.substack.com/p/the-intelligence-curse The Intelligence Curse]: With AGI, powerful actors will lose their incentives to invest in people | ||

| + | ** Updated 2025-04: [https://intelligence-curse.ai/ The Intelligence Curse] (Luke Drago and Rudolf Laine) | ||

| + | *** [https://intelligence-curse.ai/pyramid/ Pyramid Replacement] | ||

| + | *** [https://intelligence-curse.ai/capital/ Capital, AGI, and Human Ambition] | ||

| + | *** [https://intelligence-curse.ai/defining/ Defining the Intelligence Curse] | ||

| + | *** [https://intelligence-curse.ai/shaping/ Shaping the Social Contract] | ||

| + | *** [https://intelligence-curse.ai/breaking/ Breaking the Intelligence Curse] | ||

| + | *** [https://intelligence-curse.ai/history/ History is Yours to Write] | ||

* 2025-01: Microsoft: [https://blogs.microsoft.com/on-the-issues/2025/01/03/the-golden-opportunity-for-american-ai/ The Golden Opportunity for American AI] | * 2025-01: Microsoft: [https://blogs.microsoft.com/on-the-issues/2025/01/03/the-golden-opportunity-for-american-ai/ The Golden Opportunity for American AI] | ||

* 2025-01: [https://www.maximum-progress.com/p/agi-will-not-make-labor-worthless AGI Will Not Make Labor Worthless] | * 2025-01: [https://www.maximum-progress.com/p/agi-will-not-make-labor-worthless AGI Will Not Make Labor Worthless] | ||

| Line 28: | Line 66: | ||

* 2025-02: [https://arxiv.org/abs/2502.11264 Strategic Wealth Accumulation Under Transformative AI Expectations] | * 2025-02: [https://arxiv.org/abs/2502.11264 Strategic Wealth Accumulation Under Transformative AI Expectations] | ||

* 2025-02: Tyler Cowen: [https://marginalrevolution.com/marginalrevolution/2025/02/why-i-think-ai-take-off-is-relatively-slow.html Why I think AI take-off is relatively slow] | * 2025-02: Tyler Cowen: [https://marginalrevolution.com/marginalrevolution/2025/02/why-i-think-ai-take-off-is-relatively-slow.html Why I think AI take-off is relatively slow] | ||

| + | * 2025-03: Epoch AI: [https://epoch.ai/gradient-updates/most-ai-value-will-come-from-broad-automation-not-from-r-d Most AI value will come from broad automation, not from R&D] | ||

| + | ** The primary economic impact of AI will be its ability to broadly automate labor | ||

| + | ** Automating AI R&D alone likely won’t dramatically accelerate AI progress | ||

| + | ** Fully automating R&D requires a very broad set of abilities | ||

| + | ** AI takeoff will likely be diffuse and salient | ||

| + | * 2025-03: [https://www.anthropic.com/news/anthropic-economic-index-insights-from-claude-sonnet-3-7 Anthropic Economic Index: Insights from Claude 3.7 Sonnet] | ||

| + | * 2025-04: [https://inferencemagazine.substack.com/p/will-there-be-extreme-inequality Will there be extreme inequality from AI?] | ||

| + | * 2025-04: [https://www.anthropic.com/research/impact-software-development Anthropic Economic Index: AI’s Impact on Software Development] | ||

| + | * 2025-05: [https://www.theguardian.com/books/2025/may/04/the-big-idea-can-we-stop-ai-making-humans-obsolete Better at everything: how AI could make human beings irrelevant] | ||

| + | * 2025-05: Forethought: [https://www.forethought.org/research/the-industrial-explosion The Industrial Explosion] | ||

| + | * 2025-05: [https://arxiv.org/abs/2505.20273 Ten Principles of AI Agent Economics] | ||

| + | * 2025-07: [https://substack.com/home/post/p-167879696 What Economists Get Wrong about AI] They ignore innovation effects, use outdated capability assumptions, and miss the robotics revolution | ||

| + | * 2025-07: [https://www.nber.org/books-and-chapters/economics-transformative-ai/we-wont-be-missed-work-and-growth-era-agi We Won't Be Missed: Work and Growth in the Era of AGI] | ||

| + | * 2025-07: [https://www.nber.org/papers/w34034 The Economics of Bicycles for the Mind] | ||

| + | * 2025-09: [https://conference.nber.org/conf_papers/f227491.pdf Genius on Demand: The Value of Transformative Artificial Intelligence] | ||

| + | * 2025-10: [https://peterwildeford.substack.com/p/ai-is-probably-not-a-bubble AI is probably not a bubble: AI companies have revenue, demand, and paths to immense value] | ||

| + | * 2025-11: [https://windowsontheory.org/2025/11/04/thoughts-by-a-non-economist-on-ai-and-economics/ Thoughts by a non-economist on AI and economics] | ||

| + | * 2025-11: [https://www.nber.org/papers/w34444 Artificial Intelligence, Competition, and Welfare] | ||

| + | * 2025-11: [https://www.anthropic.com/research/estimating-productivity-gains Estimating AI productivity gains from Claude conversations] (Anthropic) | ||

| + | * 2025-12: [https://benjamintodd.substack.com/p/how-ai-driven-feedback-loops-could How AI-driven feedback loops could make things very crazy, very fast] | ||

| + | * 2025-12: [https://philiptrammell.com/static/Existential_Risk_and_Growth.pdf Existential Risk and Growth] (Philip Trammell and Leopold Aschenbrenner) | ||

| + | * 2026-01: [https://www.anthropic.com/research/anthropic-economic-index-january-2026-report Anthropic Economic Index: new building blocks for understanding AI use] | ||

| + | * 2026-01: [https://www.anthropic.com/research/economic-index-primitives Anthropic Economic Index report: economic primitives] | ||

| + | * 2026-02: Nate Silver: [https://www.natesilver.net/p/the-singularity-wont-be-gentle The singularity won't be gentle: If AI is even half as transformational as Silicon Valley assumes, politics will never be the same again] | ||

==Job Loss== | ==Job Loss== | ||

| Line 45: | Line 107: | ||

* 2025-01: [https://scholarspace.manoa.hawaii.edu/server/api/core/bitstreams/4f39375d-59c2-4c4a-b394-f3eed7858c80/content AI and Freelancers: Has the Inflection Point Arrived?] | * 2025-01: [https://scholarspace.manoa.hawaii.edu/server/api/core/bitstreams/4f39375d-59c2-4c4a-b394-f3eed7858c80/content AI and Freelancers: Has the Inflection Point Arrived?] | ||

* 2025-01: [https://www.aporiamagazine.com/p/yes-youre-going-to-be-replaced Yes, you're going to be replaced: So much cope about AI] | * 2025-01: [https://www.aporiamagazine.com/p/yes-youre-going-to-be-replaced Yes, you're going to be replaced: So much cope about AI] | ||

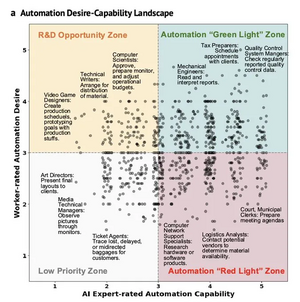

| + | * 2025-03: [https://commonplace.org/2025/03/20/will-ai-automate-away-your-job/ Will AI Automate Away Your Job? The time-horizon model explains the future of the technology] | ||

| + | * 2025-05: [https://www.forbes.com/sites/jackkelly/2025/05/04/its-time-to-get-concerned-klarna-ups-duolingo-cisco-and-many-other-companies-are-replacing-workers-with-ai/ It’s Time To Get Concerned, Klarna, UPS, Duolingo, Cisco, And Many Other Companies Are Replacing Workers With AI] | ||

| + | * 2025-05: [https://time.com/7289692/when-ai-replaces-workers/ What Happens When AI Replaces Workers?] | ||

| + | * 2025-05: [https://www.oxfordeconomics.com/resource/educated-but-unemployed-a-rising-reality-for-us-college-grads/ Educated but unemployed, a rising reality for US college grads] Structural shifts in tech hiring and the growing impact of AI are driving higher unemployment among recent college graduates | ||

| + | * 2025-05: NY Times: [https://www.nytimes.com/2025/05/30/technology/ai-jobs-college-graduates.html?unlocked_article_code=1.LE8.LlC6.eT5XcpA9hxC2&smid=url-share For Some Recent Graduates, the A.I. Job Apocalypse May Already Be Here] The unemployment rate for recent college graduates has jumped as companies try to replace entry-level workers with artificial intelligence | ||

| + | * 2025-06: [https://80000hours.org/agi/guide/skills-ai-makes-valuable/ How not to lose your job to AI] The skills AI will make more valuable (and how to learn them) | ||

| + | * 2025-06: [https://arxiv.org/abs/2506.06576 Future of Work with AI Agents: Auditing Automation and Augmentation Potential across the U.S. Workforce] | ||

| + | [[Image:0dab4c86-882d-4095-9d12-d19684ed5184 675x680.png|300px]] | ||

| + | * 2025-07: Harvard Business Review: [https://hbr.org/2025/06/what-gets-measured-ai-will-automate What Gets Measured, AI Will Automate] | ||

| + | * 2025-08: [https://digitaleconomy.stanford.edu/publications/canaries-in-the-coal-mine/ Canaries in the Coal Mine? Six Facts about the Recent Employment Effects of Artificial Intelligence] | ||

| + | * 2025-10: [https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5560401 Performance or Principle: Resistance to Artificial Intelligence in the U.S. Labor Market] | ||

| + | * 2025-10: [https://www.siliconcontinent.com/p/the-ai-becker-problem The AI Becker problem: Who will train the next generation?] | ||

| + | * 2026-01: [https://papers.ssrn.com/sol3/papers.cfm?abstract_id=6134506 AI, Automation, and Expertise] | ||

| + | * 2026-02: [https://arachnemag.substack.com/p/the-jevons-paradox-for-intelligence The Jevons Paradox for Intelligence: Fears of AI-induced job loss could not be more wrong] | ||

| + | |||

| + | ==Productivity Impact== | ||

| + | * 2025-05: [https://www.nber.org/papers/w33777 Large Language Models, Small Labor Market Effects] | ||

| + | ** Significant uptake, but very little economic impact so far | ||

| + | * 2026-02: [https://www.ft.com/content/4b51d0b4-bbfe-4f05-b50a-1d485d419dc5 The AI productivity take-off is finally visible] ([https://x.com/erikbryn/status/2023075588974735869?s=20 Erik Brynjolfsson]) | ||

| + | ** Businesses are finally beginning to reap some of AI's benefits. | ||

| + | * 2026-02: New York Times: [https://www.nytimes.com/2026/02/18/opinion/ai-software.html The A.I. Disruption We’ve Been Waiting for Has Arrived] | ||

| + | |||

| + | ==National Security== | ||

| + | * 2025-04: Jeremie Harris and Edouard Harris: [https://superintelligence.gladstone.ai/ America’s Superintelligence Project] | ||

| + | |||

| + | ==AI Manhattan Project== | ||

| + | * 2024-06: [https://situational-awareness.ai/wp-content/uploads/2024/06/situationalawareness.pdf Situational Awareness] ([https://www.forourposterity.com/ Leopold Aschenbrenner]) - [https://www.lesswrong.com/posts/nP5FFYFjtY8LgWymt/quotes-from-leopold-aschenbrenner-s-situational-awareness select quotes], [https://www.youtube.com/watch?v=zdbVtZIn9IM podcast], [https://danielmiessler.com/p/podcast-summary-dwarkesh-vs-leopold-aschenbrenner text summary of podcast] | ||

| + | * 2024-10: [https://thezvi.substack.com/p/ai-88-thanks-for-the-memos?open=false#%C2%A7thanks-for-the-memos-introduction-and-competitiveness White House Memo calls for action on AI] | ||

| + | * 2024-11: [https://www.uscc.gov/annual-report/2024-annual-report-congress 2024 Annual Report to Congress]: [https://www.reuters.com/technology/artificial-intelligence/us-government-commission-pushes-manhattan-project-style-ai-initiative-2024-11-19/ calls] for "Manhattan Project-style" effort | ||

| + | * 2025-05-29: [https://x.com/ENERGY/status/1928085878561272223 DoE Tweet]: "AI is the next Manhattan Project, and THE UNITED STATES WILL WIN. 🇺🇸" | ||

| + | * 2025-07: [https://epoch.ai/gradient-updates/how-big-could-an-ai-manhattan-project-get How big could an “AI Manhattan Project” get?] | ||

| + | |||

| + | =Near-term= | ||

| + | * 2021-08: Daniel Kokotajlo: [https://www.lesswrong.com/posts/6Xgy6CAf2jqHhynHL/what-2026-looks-like What 2026 looks like] | ||

| + | * 2025-02: Sam Altman: [https://blog.samaltman.com/three-observations Three Observations] | ||

| + | *# The intelligence of an AI model roughly equals the log of the resources used to train and run it. | ||

| + | *# The cost to use a given level of AI falls about 10x every 12 months, and lower prices lead to much more use | ||

| + | *# The socioeconomic value of linearly increasing intelligence is super-exponential in nature | ||

| + | * 2025-03: [https://www.pathwaysai.org/p/glimpses-of-ai-progess Glimpses of AI Progress: Mental models for fast times] | ||

| + | * 2025-03: [https://www.nature.com/articles/s41598-025-92190-7 Navigating artificial general intelligence development: societal, technological, ethical, and brain-inspired pathways] | ||

| + | * 2025-04: Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifland, Romeo Dean: [https://ai-2027.com/ AI 2027] ([https://ai-2027.com/scenario.pdf pdf]) | ||

| + | ** 2025-07: Video: [https://www.youtube.com/watch?v=5KVDDfAkRgc Are We 3 Years From AI Disaster? A Rigorous Forecast] | ||

| + | * 2025-04: Stanford HAI: [https://hai-production.s3.amazonaws.com/files/hai_ai_index_report_2025.pdf Artificial Intelligence Index Report 2025] | ||

| + | * 2025-04: Arvind Narayanan and Sayash Kapoor: [https://kfai-documents.s3.amazonaws.com/documents/c3cac5a2a7/AI-as-Normal-Technology---Narayanan---Kapoor.pdf AI as Normal Technology] | ||

| + | * 2025-04: Dwarkesh Patel: [https://www.dwarkesh.com/p/questions-about-ai Questions about the Future of AI] | ||

| + | * 2025-05: [https://www.bondcap.com/report/pdf/Trends_Artificial_Intelligence.pdf Trends – Artificial Intelligence] | ||

| + | * 2025-06: IdeaFoundry: [https://ideafoundry.substack.com/p/evolution-vs-extinction-the-choice Evolution vs. Extinction: The Choice is Ours] The next 18 months will decide whether AI ends us or evolves us | ||

| + | * 2025-07: [https://cfg.eu/advanced-ai-possible-futures/ Advanced AI: Possible futures] Five scenarios for how the AI-transition could unfold | ||

| + | * 2025-11: [https://android-dreams.ai/ Android Dreams] | ||

| + | * 2026-02: [https://www.citriniresearch.com/ Citrini]: [https://www.citriniresearch.com/p/2028gic The 2028 Global Intelligence Crisis: A Thought Exercise in Financial History, from the Future] | ||

| + | |||

| + | ==Insightful Analysis of Current State== | ||

| + | * 2025-11: Andy Masley: [https://andymasley.substack.com/p/the-lump-of-cognition-fallacy The lump of cognition fallacy: The extended mind as the advance of civilization] | ||

| + | * 2026-02: Eric Jang: [https://evjang.com/2026/02/04/rocks.html As Rocks May Think] | ||

| + | * 2026-02: Matt Shumer: [https://x.com/mattshumer_/status/2021256989876109403 Something Big Is Happening] | ||

| + | * 2026-02: Minh Pham: [https://x.com/buckeyevn/status/2014171253045960803?s=20 Why Most Agent Harnesses Are Not Bitter Lesson Pilled] | ||

=Overall= | =Overall= | ||

| + | * 1993: [https://en.wikipedia.org/wiki/Vernor_Vinge Vernor Vinge]: [https://edoras.sdsu.edu/~vinge/misc/singularity.html The Coming Technological Singularity: How to Survive in the Post-Human Era] | ||

| + | * 2025-03: Kevin Roose (New York Times): [https://www.nytimes.com/2025/03/14/technology/why-im-feeling-the-agi.html?unlocked_article_code=1.304.TIEy.SmNhKYO4e9c7&smid=url-share Powerful A.I. Is Coming. We’re Not Ready.] Three arguments for taking progress toward artificial general intelligence, or A.G.I., more seriously — whether you’re an optimist or a pessimist. | ||

| + | * 2025-03: Nicholas Carlini: [https://nicholas.carlini.com/writing/2025/thoughts-on-future-ai.html My Thoughts on the Future of "AI"]: "I have very wide error bars on the potential future of large language models, and I think you should too." | ||

| + | * 2025-06: Sam Altman: [https://blog.samaltman.com/the-gentle-singularity The Gentle Singularity] | ||

| + | |||

==Surveys of Opinions/Predictions== | ==Surveys of Opinions/Predictions== | ||

| + | * 2016-06: [https://aiimpacts.org/2016-expert-survey-on-progress-in-ai/ 2016 Expert Survey on Progress in AI] | ||

| + | ** 2023-03: [https://aiimpacts.org/scoring-forecasts-from-the-2016-expert-survey-on-progress-in-ai/ Scoring forecasts from the 2016 “Expert Survey on Progress in AI”] | ||

| + | * 2022-10: Forecasting Research Institute: [https://forecastingresearch.org/near-term-xpt-accuracy Assessing Near-Term Accuracy in the Existential Risk Persuasion Tournament] | ||

| + | ** 2025-09: Ethan Mollick: [https://x.com/emollick/status/1962859757674344823 Progress is ahead of expectations] | ||

| + | * 2023-08: [https://wiki.aiimpacts.org/ai_timelines/predictions_of_human-level_ai_timelines/ai_timeline_surveys/2023_expert_survey_on_progress_in_ai 2023 Expert Survey on Progress in AI] | ||

| + | * 2024-01: [https://arxiv.org/abs/2401.02843 Thousands of AI Authors on the Future of AI] | ||

* 2025-02: [https://arxiv.org/abs/2502.14870 Why do Experts Disagree on Existential Risk and P(doom)? A Survey of AI Experts] | * 2025-02: [https://arxiv.org/abs/2502.14870 Why do Experts Disagree on Existential Risk and P(doom)? A Survey of AI Experts] | ||

| + | * 2025-02: Nicholas Carlini: [https://nicholas.carlini.com/writing/2025/forecasting-ai-2025-update.html AI forecasting retrospective: you're (probably) over-confident] | ||

| + | * 2025-04: Helen Toner: [https://helentoner.substack.com/p/long-timelines-to-advanced-ai-have "Long" timelines to advanced AI have gotten crazy short] | ||

| + | * 2025-05: [https://theaidigest.org/ai2025-analysis-may AI 2025 Forecasts - May Update] | ||

| + | * 2026-02: [https://www.nature.com/articles/s41598-026-39070-w Lay beliefs about the badness, likelihood, and importance of human extinction] | ||

==Bad Outcomes== | ==Bad Outcomes== | ||

| + | * [https://pauseai.info/pdoom List of p(doom) values] | ||

* 2019-03: [https://www.alignmentforum.org/posts/HBxe6wdjxK239zajf/what-failure-looks-like What failure looks like] | * 2019-03: [https://www.alignmentforum.org/posts/HBxe6wdjxK239zajf/what-failure-looks-like What failure looks like] | ||

| + | * 2023-03: gwern: [https://gwern.net/fiction/clippy It Looks Like You’re Trying To Take Over The World] | ||

* 2025-01: [https://arxiv.org/abs/2501.16946 Gradual Disempowerment: Systemic Existential Risks from Incremental AI Development] ([https://gradual-disempowerment.ai/ web version]) | * 2025-01: [https://arxiv.org/abs/2501.16946 Gradual Disempowerment: Systemic Existential Risks from Incremental AI Development] ([https://gradual-disempowerment.ai/ web version]) | ||

** 2025-02: [https://thezvi.substack.com/p/the-risk-of-gradual-disempowerment The Risk of Gradual Disempowerment from AI] | ** 2025-02: [https://thezvi.substack.com/p/the-risk-of-gradual-disempowerment The Risk of Gradual Disempowerment from AI] | ||

| + | ** 2025-05: [https://www.lesswrong.com/posts/GAv4DRGyDHe2orvwB/gradual-disempowerment-concrete-research-projects Gradual Disempowerment: Concrete Research Projects] | ||

| + | * 2025-04: Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifland, Romeo Dean: [https://ai-2027.com/ AI 2027] ([https://ai-2027.com/scenario.pdf pdf]) | ||

| + | * 2025-04: [https://www.forethought.org/research/ai-enabled-coups-how-a-small-group-could-use-ai-to-seize-power AI-Enabled Coups: How a Small Group Could Use AI to Seize Power] | ||

| + | * 2025-09: [https://doctrines.ai/ The three main doctrines on the future of AI] | ||

| + | ** '''Dominance doctrine:''' First actor to create advanced AI will attain overwhelming strategic superiority | ||

| + | ** '''Extinction doctrine:''' Humanity will lose control of ASI, leading to extinction or permanent disempowerment | ||

| + | ** '''Replacement doctrine:''' AI will automate human tasks, but without fundamentally reshaping or ending civilization | ||

| + | * 2025-09: Sean ÓhÉigeartaigh: [https://www.cambridge.org/core/journals/cambridge-prisms-extinction/article/extinction-of-the-human-species-what-could-cause-it-and-how-likely-is-it-to-occur/D8816A79BEF5A4C30A3E44FD8D768622 Extinction of the human species: What could cause it and how likely is it to occur?] | ||

| + | |||

| + | ==Intelligence Explosion== | ||

| + | * 2023-06: [https://www.openphilanthropy.org/research/what-a-compute-centric-framework-says-about-takeoff-speeds/ What a Compute-Centric Framework Says About Takeoff Speeds] | ||

| + | ** [https://takeoffspeeds.com/ takeoffspeeds.com simulator] | ||

| + | * 2025-02: [https://www.forethought.org/research/three-types-of-intelligence-explosion Three Types of Intelligence Explosion] | ||

| + | * 2025-03: Future of Life Institute: [https://futureoflife.org/ai/are-we-close-to-an-intelligence-explosion/ Are we close to an intelligence explosion?] AIs are inching ever-closer to a critical threshold. Beyond this threshold lie great risks—but crossing it is not inevitable. | ||

| + | * 2025-03: Forethought: [https://www.forethought.org/research/will-ai-r-and-d-automation-cause-a-software-intelligence-explosion Will AI R&D Automation Cause a Software Intelligence Explosion?] | ||

| + | [[Image:Gm-1jugbYAAtq Y.jpeg|450px]] | ||

| + | * 2025-05: [https://www.thelastinvention.ai/ The Last Invention] Why Humanity’s Final Creation Changes Everything | ||

| + | * 2025-08: [https://www.forethought.org/research/how-quick-and-big-would-a-software-intelligence-explosion-be How quick and big would a software intelligence explosion be?] | ||

| + | |||

| + | ==Superintelligence== | ||

| + | * 2024-10: [http://yager-research.ca/2024/10/how-smart-will-asi-be/ How Smart will ASI be?] | ||

| + | * 2024-11: [http://yager-research.ca/2024/11/concise-argument-for-asi-risk/ Concise Argument for ASI Risk] | ||

| + | * 2025-03: [https://dynomight.net/smart/ Limits of smart] | ||

| + | * 2025-05: [https://timfduffy.substack.com/p/the-limits-of-superintelligence?manualredirect= The Limits of Superintelligence] | ||

| + | |||

| + | ==Long-range/Philosophy== | ||

| + | * 2023-03: Dan Hendrycks: [https://arxiv.org/abs/2303.16200 Natural Selection Favors AIs over Humans] | ||

=Psychology= | =Psychology= | ||

* 2025-01: [https://longerramblings.substack.com/p/a-defence-of-slowness-at-the-end A defence of slowness at the end of the world] | * 2025-01: [https://longerramblings.substack.com/p/a-defence-of-slowness-at-the-end A defence of slowness at the end of the world] | ||

| − | =Science & Technology Improvements= | + | =Positives & Optimism= |

| + | ==Science & Technology Improvements== | ||

| + | * 2023-05: [https://www.planned-obsolescence.org/author/kelsey/ Kelsey Piper]: [https://www.planned-obsolescence.org/the-costs-of-caution/ The costs of caution] | ||

* 2024-09: Sam Altman: [https://ia.samaltman.com/ The Intelligence Age] | * 2024-09: Sam Altman: [https://ia.samaltman.com/ The Intelligence Age] | ||

* 2024-10: Dario Amodei: [https://darioamodei.com/machines-of-loving-grace Machines of Loving Grace] | * 2024-10: Dario Amodei: [https://darioamodei.com/machines-of-loving-grace Machines of Loving Grace] | ||

* 2024-11: Google DeepMind: [https://www.aipolicyperspectives.com/p/a-new-golden-age-of-discovery A new golden age of discovery] | * 2024-11: Google DeepMind: [https://www.aipolicyperspectives.com/p/a-new-golden-age-of-discovery A new golden age of discovery] | ||

| + | * 2025-03: [https://finmoorhouse.com/ Fin Moorhouse], [https://www.williammacaskill.com/ Will MacAskill]: [https://www.forethought.org/research/preparing-for-the-intelligence-explosion Preparing for the Intelligence Explosion] | ||

| + | |||

| + | ==Social== | ||

| + | * 2025-09: [https://blog.cosmos-institute.org/p/coasean-bargaining-at-scale Coasean Bargaining at Scale]: Decentralization, coordination, and co-existence with AGI | ||

| + | * 2025-10: [https://www.nber.org/system/files/chapters/c15309/c15309.pdf#page=15.23 The Coasean Singularity? Demand, Supply, and Market Design with AI Agents] | ||

| + | |||

| + | ==Post-scarcity Society== | ||

| + | * 2004: Eliezer Yudkowsky (MIRI): [https://intelligence.org/files/CEV.pdf Coherent Extrapolated Volition] and [https://www.lesswrong.com/s/d3WgHDBAPYYScp5Em/p/K4aGvLnHvYgX9pZHS Fun Theory] | ||

| + | * 2019: John Danaher: [https://www.jstor.org/stable/j.ctvn5txpc Automation and Utopia: Human Flourishing in a World Without Work] | ||

| + | |||

| + | ==The Grand Tradeoff== | ||

| + | * 2026-02: Nick Bostrom: [https://nickbostrom.com/optimal.pdf Optimal Timing for Superintelligence: Mundane Considerations for Existing People] | ||

=Plans= | =Plans= | ||

| Line 67: | Line 243: | ||

* Marius Hobbhahn: [https://www.lesswrong.com/posts/bb5Tnjdrptu89rcyY/what-s-the-short-timeline-plan What’s the short timeline plan?] | * Marius Hobbhahn: [https://www.lesswrong.com/posts/bb5Tnjdrptu89rcyY/what-s-the-short-timeline-plan What’s the short timeline plan?] | ||

* [https://cfg.eu/building-cern-for-ai/ Building CERN for AI: An institutional blueprint] | * [https://cfg.eu/building-cern-for-ai/ Building CERN for AI: An institutional blueprint] | ||

| + | * [https://arxiv.org/abs/2503.05710 AGI, Governments, and Free Societies] | ||

| + | * [https://controlai.com/ Control AI]: [https://controlai.com/dip The Direct Institutional Plan] | ||

| + | * Luke Drago and L Rudolf L: [https://lukedrago.substack.com/p/the-use-of-knowledge-in-agi-society?triedRedirect=true The use of knowledge in (AGI) society]: How to build to break the [https://lukedrago.substack.com/p/the-intelligence-curse intelligence curse] | ||

| + | * [https://www.agisocialcontract.org/ AGI Social Contract] | ||

| + | ** [https://www.agisocialcontract.org/forging-a-new-agi-social-contract Forging A New AGI Social Contract] | ||

| + | * Yoshua Bengio: [https://time.com/7283507/safer-ai-development/ A Potential Path to Safer AI Development] | ||

| + | ** 2025-02: [https://arxiv.org/abs/2502.15657 Superintelligent Agents Pose Catastrophic Risks: Can Scientist AI Offer a Safer Path?] | ||

| + | * 2026-01: Dario Amodei: [https://www.darioamodei.com/essay/the-adolescence-of-technology The Adolescence of Technology: Confronting and Overcoming the Risks of Powerful AI] | ||

| + | * 2026-02: Ryan Greenblatt: [https://www.lesswrong.com/posts/vjAM7F8vMZS7oRrrh/how-do-we-more-safely-defer-to-ais How do we (more) safely defer to AIs?] | ||

==Philosophy== | ==Philosophy== | ||

| Line 86: | Line 271: | ||

** [https://x.com/AnthonyNAguirre/status/1898023049930457468 2025-03]: [https://keepthefuturehuman.ai/ Keep The Future Human] | ** [https://x.com/AnthonyNAguirre/status/1898023049930457468 2025-03]: [https://keepthefuturehuman.ai/ Keep The Future Human] | ||

[[Image:GlchEeObwAQ88NK.jpeg|300px]] | [[Image:GlchEeObwAQ88NK.jpeg|300px]] | ||

| + | * 2025-04: Scott Alexander (Astral Codex Ten): [https://www.astralcodexten.com/p/the-colors-of-her-coat The Colors Of Her Coat] (response to [https://www.theintrinsicperspective.com/p/welcome-to-the-semantic-apocalypse semantic apocalypse] and semantic satiation) | ||

| + | * 2025-05: Helen Toner: [https://www.ai-frontiers.org/articles/were-arguing-about-ai-safety-wrong We’re Arguing About AI Safety Wrong]: Dynamism vs. stasis is a clearer lens for criticizing controversial AI safety prescriptions | ||

| + | * 2025-05: Joe Carlsmith: [https://joecarlsmith.substack.com/p/the-stakes-of-ai-moral-status The stakes of AI moral status] | ||

| + | |||

| + | ==Research== | ||

| + | * 2025-05: [https://www.lesswrong.com/posts/GAv4DRGyDHe2orvwB/gradual-disempowerment-concrete-research-projects Gradual Disempowerment: Concrete Research Projects] | ||

==Alignment== | ==Alignment== | ||

| − | * [https://static1.squarespace.com/static/65392ca578eee444c445c9de/t/6606f95edb20e8118074a344/1711733370985/human-values-and-alignment-29MAR2024.pdf What are human values, and how do we align AI to them?] ([https://meaningalignment.substack.com/p/0480e023-98c0-4633-a604-990d3ac880ac blog]) | + | * 2023-03: Leopold Aschenbrenner: [https://www.forourposterity.com/nobodys-on-the-ball-on-agi-alignment/ Nobody’s on the ball on AGI alignment] |

| − | * Joe Carlsmith | + | * 2024-03: [https://static1.squarespace.com/static/65392ca578eee444c445c9de/t/6606f95edb20e8118074a344/1711733370985/human-values-and-alignment-29MAR2024.pdf What are human values, and how do we align AI to them?] ([https://meaningalignment.substack.com/p/0480e023-98c0-4633-a604-990d3ac880ac blog]) |

| − | *# [https://joecarlsmith.substack.com/p/what-is-it-to-solve-the-alignment What is it to solve the alignment problem?] Also: to avoid it? Handle it? Solve it forever? Solve it completely? | + | * 2025: Joe Carlsmith: [https://joecarlsmith.substack.com/p/how-do-we-solve-the-alignment-problem How do we solve the alignment problem?] Introduction to an essay series on paths to safe, useful superintelligence |

| − | *# [https://joecarlsmith.substack.com/p/when-should-we-worry-about-ai-power When should we worry about AI power-seeking?] | + | *# [https://joecarlsmith.substack.com/p/what-is-it-to-solve-the-alignment What is it to solve the alignment problem?] Also: to avoid it? Handle it? Solve it forever? Solve it completely? ([https://joecarlsmithaudio.buzzsprout.com/2034731/episodes/16617671-what-is-it-to-solve-the-alignment-problem audio version]) |

| + | *# [https://joecarlsmith.substack.com/p/when-should-we-worry-about-ai-power When should we worry about AI power-seeking?] ([https://joecarlsmithaudio.buzzsprout.com/2034731/episodes/16651469-when-should-we-worry-about-ai-power-seeking audio version]) | ||

| + | *# [https://joecarlsmith.substack.com/p/paths-and-waystations-in-ai-safety Paths and waystations in AI safety] ([https://joecarlsmithaudio.buzzsprout.com/2034731/episodes/16768804-paths-and-waystations-in-ai-safety audio version]) | ||

| + | *# [https://joecarlsmith.substack.com/p/ai-for-ai-safety AI for AI safety] ([https://joecarlsmithaudio.buzzsprout.com/2034731/episodes/16790183-ai-for-ai-safety audio version]) | ||

| + | *# [https://joecarlsmith.substack.com/p/can-we-safely-automate-alignment Can we safely automate alignment research?] ([https://joecarlsmithaudio.buzzsprout.com/2034731/episodes/17069901-can-we-safely-automate-alignment-research audio version], [https://joecarlsmith.substack.com/p/video-and-transcript-of-talk-on-automating?utm_source=post-email-title&publication_id=1022275&post_id=162375391&utm_campaign=email-post-title&isFreemail=true&r=5av1bk&triedRedirect=true&utm_medium=email video version]) | ||

| + | *# [https://joecarlsmith.substack.com/p/giving-ais-safe-motivations?utm_source=post-email-title&publication_id=1022275&post_id=171250683&utm_campaign=email-post-title&isFreemail=true&r=5av1bk&triedRedirect=true&utm_medium=email Giving AIs safe motivations] ([https://joecarlsmithaudio.buzzsprout.com/2034731/episodes/17686921-giving-ais-safe-motivations audio version]) | ||

| + | *# [https://joecarlsmith.com/2025/09/29/controlling-the-options-ais-can-pursue Controlling the options AIs can pursue] ([https://joecarlsmithaudio.buzzsprout.com/2034731/episodes/17909401-controlling-the-options-ais-can-pursue audio version]) | ||

| + | *# [https://joecarlsmith.substack.com/p/how-human-like-do-safe-ai-motivations?utm_source=post-email-title&publication_id=1022275&post_id=178666988&utm_campaign=email-post-title&isFreemail=true&r=5av1bk&triedRedirect=true&utm_medium=email How human-like do safe AI motivations need to be?] ([https://joecarlsmithaudio.buzzsprout.com/2034731/episodes/18175429-how-human-like-do-safe-ai-motivations-need-to-be audio version]) | ||

| + | *# [https://joecarlsmith.substack.com/p/building-ais-that-do-human-like-philosophy Building AIs that do human-like philosophy: AIs will face philosophical questions humans can't answer for them] ([https://joecarlsmithaudio.buzzsprout.com/2034731/episodes/18591342-building-ais-that-do-human-like-philosophy audio version]) | ||

| + | * 2025-04: Dario Amodei: [https://www.darioamodei.com/post/the-urgency-of-interpretability The Urgency of Interpretability] | ||

| + | |||

| + | ==Strategic/Technical== | ||

| + | * 2025-03: [https://resilience.baulab.info/docs/AI_Action_Plan_RFI.pdf AI Dominance Requires Interpretability and Standards for Transparency and Security] | ||

| + | * 2026-02: [https://www.gap-map.org/capabilities/?sort=bottlenecks Fundamental Development Gap Map v1.0] | ||

==Strategic/Policy== | ==Strategic/Policy== | ||

| − | * Amanda Askell, Miles Brundage, Gillian Hadfield: [https://arxiv.org/abs/1907.04534 The Role of Cooperation in Responsible AI Development] | + | * 2015-03: Sam Altman: [https://blog.samaltman.com/machine-intelligence-part-2 Machine intelligence, part 2] |

| − | * Dan Hendrycks, Eric Schmidt, Alexandr Wang: [https://www.nationalsecurity.ai/ Superintelligence Strategy] | + | * 2019-07: Amanda Askell, Miles Brundage, Gillian Hadfield: [https://arxiv.org/abs/1907.04534 The Role of Cooperation in Responsible AI Development] |

| + | * 2025-03: Dan Hendrycks, Eric Schmidt, Alexandr Wang: [https://www.nationalsecurity.ai/ Superintelligence Strategy] | ||

** [https://www.nationalsecurity.ai/chapter/executive-summary Executive Summary] | ** [https://www.nationalsecurity.ai/chapter/executive-summary Executive Summary] | ||

** [https://www.nationalsecurity.ai/chapter/introduction Introduction] | ** [https://www.nationalsecurity.ai/chapter/introduction Introduction] | ||

| Line 104: | Line 309: | ||

** [https://www.nationalsecurity.ai/chapter/conclusion Conclusion] | ** [https://www.nationalsecurity.ai/chapter/conclusion Conclusion] | ||

** [https://www.nationalsecurity.ai/chapter/appendix Appendix FAQs] | ** [https://www.nationalsecurity.ai/chapter/appendix Appendix FAQs] | ||

| + | * Anthony Aguirre: [https://keepthefuturehuman.ai/ Keep The Future Human] ([https://keepthefuturehuman.ai/essay/ essay]) | ||

| + | ** [https://www.youtube.com/watch?v=zeabrXV8zNE The 4 Rules That Could Stop AI Before It’s Too Late (video)] (2025) | ||

| + | **# Oversight: Registration required for training >10<sup>25</sup> FLOP and inference >10<sup>19</sup> FLOP/s (~1,000 B200 GPUs @ $25M). Build cryptographic licensing into hardware. | ||

| + | **# Computation Limits: Ban on training models >10<sup>27</sup> FLOP or inference >10<sup>20</sup> FLOP/s. | ||

| + | **# Strict Liability: Hold AI companies responsible for outcomes. | ||

| + | **# Tiered Regulation: Low regulation on tool-AI, strictest regulation on AGI (general, capable, autonomous systems). | ||

| + | * 2025-04: Helen Toner: [https://helentoner.substack.com/p/nonproliferation-is-the-wrong-approach?source=queue Nonproliferation is the wrong approach to AI misuse] | ||

| + | * 2025-04: MIRI: [https://techgov.intelligence.org/research/ai-governance-to-avoid-extinction AI Governance to Avoid Extinction: The Strategic Landscape and Actionable Research Questions] | ||

| + | * 2025-05: [https://writing.antonleicht.me/p/the-new-ai-policy-frontier The New AI Policy Frontier]: Beyond the shortcomings of centralised control and alignment, a new school of thought on AI governance emerges. It still faces tricky politics. | ||

| + | * 2025-05: [https://uncpga.world/agi-uncpga-report/ AGI UNCPGA Report]: Governance of the Transition to Artificial General Intelligence (AGI): Urgent Considerations for the UN General Assembly: Report for the Council of Presidents of the United Nations General Assembly (UNCPGA) | ||

| + | * 2025-06: [https://writing.antonleicht.me/p/ai-and-jobs-politics-without-policy AI & Jobs: Politics without Policy] Political support mounts - for a policy platform that does not yet exist | ||

| + | * 2025-06: [https://x.com/littIeramblings Sarah Hastings-Woodhouse]: [https://drive.google.com/file/d/1mmdHBE6M2yiyL21-ctTuRLNH5xOFjqWm/view Safety Features for a Centralized AGI Project] | ||

| + | * 2025-07: [https://writing.antonleicht.me/p/a-moving-target A Moving Target] Why we might not be quite ready to comprehensively regulate AI, and why it matters | ||

| + | * 2025-07: [https://www-cdn.anthropic.com/0dc382a2086f6a054eeb17e8a531bd9625b8e6e5.pdf Anthropic: Build AI in America] ([https://www.anthropic.com/news/build-ai-in-america blog]) | ||

| + | * 2025-12: [https://asi-prevention.com/ How middle powers may prevent the development of artificial superintelligence] | ||

| + | |||

| + | ==Restriction== | ||

| + | * 2024-05: OpenAI: [https://openai.com/index/reimagining-secure-infrastructure-for-advanced-ai/ Reimagining secure infrastructure for advanced AI] OpenAI calls for an evolution in infrastructure security to protect advanced AI | ||

| + | * 2025-07: MIRI: [https://arxiv.org/abs/2507.09801 Technical Requirements for Halting Dangerous AI Activities] | ||

=See Also= | =See Also= | ||

* [[AI safety]] | * [[AI safety]] | ||

Latest revision as of 09:34, 26 February 2026

Capability Scaling

- 2019-03: Rich Sutton: The Bitter Lesson

- 2020-09: Ajeya Cotra: Draft report on AI timelines

- 2022-01: gwern: The Scaling Hypothesis

- 2023-05: Richard Ngo: Clarifying and predicting AGI

- 2024-06: Aidan McLaughlin: AI Search: The Bitter-er Lesson

- 2025-03: Measuring AI Ability to Complete Long Tasks

- 2025-04: Forecaster reacts: METR's bombshell paper about AI acceleration: New data supports an exponential AI curve, but lots of uncertainty remains

- 2025-04: AI Digest: A new Moore's Law for AI agents

- 2025-04: Trends in AI Supercomputers (preprint)

- The Road to AGI (timeline visualization)

- 2025-09: The Illusion of Diminishing Returns: Measuring Long Horizon Execution in LLMs

- 2025-09: Failing to Understand the Exponential, Again

- 2026-02: Ryan Greenblatt: Distinguish between inference scaling and "larger tasks use more compute"

Scaling Laws

See: Scaling Laws

AGI Achievable

- Yoshua Bengio: Managing extreme AI risks amid rapid progress

- Leopold Aschenbrenner: Situational Awareness: Counting the OOMs

- Richard Ngo: Visualizing the deep learning revolution

- Katja Grace: Survey of 2,778 AI authors: six parts in pictures

- Epoch AI: Machine Learning Trends

- AI Digest: How fast is AI improving?

- 2025-06: The case for AGI by 2030

AGI Definition

- 2023-11: Allan Dafoe, Shane Legg, et al.: Levels of AGI for Operationalizing Progress on the Path to AGI

- 2024-04: Bowen Xu: What is Meant by AGI? On the Definition of Artificial General Intelligence

- 2025-10: Dan Hendrycks et al.: A Definition of AGI

- 2026-01: On the universal definition of intelligence

Progress Models

From AI Impact Predictions:

Economic and Political

- 2019-11: The Impact of Artificial Intelligence on the Labor Market

- 2020-06: Modeling the Human Trajectory (GDP)

- 2021-06: Report on Whether AI Could Drive Explosive Economic Growth

- 2023-10: Marc Andreessen: The Techno-Optimist Manifesto

- 2023-12: My techno-optimism: "defensive acceleration" (Vitalik Buterin)

- 2024-03: Noah Smith: Plentiful, high-paying jobs in the age of AI: Comparative advantage is very subtle, but incredibly powerful. (video)

- 2024-03: Scenarios for the Transition to AGI (AGI leads to wage collapse)

- 2024-06: Situational Awareness (Leopold Aschenbrenner) - select quotes, podcast, text summary of podcast

- 2024-06: AI and Growth: Where Do We Stand?

- 2024-09: OpenAI Infrastructure is Destiny: Economic Returns on US Investment in Democratic AI

- 2024-12: By default, capital will matter more than ever after AGI (L Rudolf L)

- 2025-01: The Intelligence Curse: With AGI, powerful actors will lose their incentives to invest in people

- Updated 2025-04: The Intelligence Curse (Luke Drago and Rudolf Laine)

- 2025-01: Microsoft: The Golden Opportunity for American AI

- 2025-01: AGI Will Not Make Labor Worthless

- 2025-01: AI in America: OpenAI's Economic Blueprint (blog)

- 2025-01: How much economic growth from AI should we expect, how soon?

- 2025-02: Morgan Stanley: The Humanoid 100: Mapping the Humanoid Robot Value Chain

- 2025-02: The Anthropic Economic Index: Which Economic Tasks are Performed with AI? Evidence from Millions of Claude Conversations

- 2025-02: Strategic Wealth Accumulation Under Transformative AI Expectations

- 2025-02: Tyler Cowen: Why I think AI take-off is relatively slow

- 2025-03: Epoch AI: Most AI value will come from broad automation, not from R&D

- The primary economic impact of AI will be its ability to broadly automate labor

- Automating AI R&D alone likely won’t dramatically accelerate AI progress

- Fully automating R&D requires a very broad set of abilities

- AI takeoff will likely be diffuse and salient

- 2025-03: Anthropic Economic Index: Insights from Claude 3.7 Sonnet

- 2025-04: Will there be extreme inequality from AI?

- 2025-04: Anthropic Economic Index: AI’s Impact on Software Development

- 2025-05: Better at everything: how AI could make human beings irrelevant

- 2025-05: Forethought: The Industrial Explosion

- 2025-05: Ten Principles of AI Agent Economics

- 2025-07: What Economists Get Wrong about AI: They ignore innovation effects, use outdated capability assumptions, and miss the robotics revolution

- 2025-07: We Won't Be Missed: Work and Growth in the Era of AGI

- 2025-07: The Economics of Bicycles for the Mind

- 2025-09: Genius on Demand: The Value of Transformative Artificial Intelligence

- 2025-10: AI is probably not a bubble: AI companies have revenue, demand, and paths to immense value

- 2025-11: Thoughts by a non-economist on AI and economics

- 2025-11: Artificial Intelligence, Competition, and Welfare

- 2025-11: Estimating AI productivity gains from Claude conversations (Anthropic)

- 2025-12: How AI-driven feedback loops could make things very crazy, very fast

- 2025-12: Existential Risk and Growth (Philip Trammell and Leopold Aschenbrenner)

- 2026-01: Anthropic Economic Index: new building blocks for understanding AI use

- 2026-01: Anthropic Economic Index report: economic primitives

- 2026-02: Nate Silver: The singularity won't be gentle: If AI is even half as transformational as Silicon Valley assumes, politics will never be the same again

Job Loss

- 2023-03: GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models

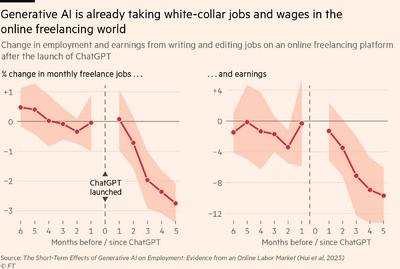

- 2023-08: The Short-Term Effects of Generative Artificial Intelligence on Employment: Evidence from an Online Labor Market

- 2023-09: What drives UK firms to adopt AI and robotics, and what are the consequences for jobs?

- 2023-11: New Analysis Shows Over 20% of US Jobs Significantly Exposed to AI Automation In the Near Future

- 2024-01: Duolingo cuts 10% of its contractor workforce as the company embraces AI

- 2024-02: Gen AI is a tool for growth, not just efficiency: Tech CEOs are investing to build their workforce and capitalise on new opportunities from generative AI. That’s a sharp contrast to how their peers view it.

- 2024-04: AI is Poised to Replace the Entry-Level Grunt Work of a Wall Street Career

- 2024-07: AI Is Already Taking Jobs in the Video Game Industry: A WIRED investigation finds that major players like Activision Blizzard, which recently laid off scores of workers, are using generative AI for game development

- 2024-08: Klarna: AI lets us cut thousands of jobs - but pay more

- 2025-01: AI and Freelancers: Has the Inflection Point Arrived?

- 2025-01: Yes, you're going to be replaced: So much cope about AI

- 2025-03: Will AI Automate Away Your Job? The time-horizon model explains the future of the technology

- 2025-05: It’s Time To Get Concerned, Klarna, UPS, Duolingo, Cisco, And Many Other Companies Are Replacing Workers With AI

- 2025-05: What Happens When AI Replaces Workers?

- 2025-05: Educated but unemployed, a rising reality for US college grads: Structural shifts in tech hiring and the growing impact of AI are driving higher unemployment among recent college graduates

- 2025-05: NY Times: For Some Recent Graduates, the A.I. Job Apocalypse May Already Be Here: The unemployment rate for recent college graduates has jumped as companies try to replace entry-level workers with artificial intelligence

- 2025-06: How not to lose your job to AI: The skills AI will make more valuable (and how to learn them)

- 2025-06: Future of Work with AI Agents: Auditing Automation and Augmentation Potential across the U.S. Workforce

- 2025-07: Harvard Business Review: What Gets Measured, AI Will Automate

- 2025-08: Canaries in the Coal Mine? Six Facts about the Recent Employment Effects of Artificial Intelligence

- 2025-10: Performance or Principle: Resistance to Artificial Intelligence in the U.S. Labor Market

- 2025-10: The AI Becker problem: Who will train the next generation?

- 2026-01: AI, Automation, and Expertise

- 2026-02: The Jevons Paradox for Intelligence: Fears of AI-induced job loss could not be more wrong

Productivity Impact

- 2025-05: Large Language Models, Small Labor Market Effects

- Significant uptake, but very little economic impact so far

- 2026-02: The AI productivity take-off is finally visible (Erik Brynjolfsson)

- Businesses are finally beginning to reap some of AI's benefits.

- 2026-02: New York Times: The A.I. Disruption We’ve Been Waiting for Has Arrived

National Security

- 2025-04: Jeremie Harris and Edouard Harris: America’s Superintelligence Project

AI Manhattan Project

- 2024-06: Situational Awareness (Leopold Aschenbrenner) - select quotes, podcast, text summary of podcast

- 2024-10: White House Memo calls for action on AI

- 2024-11: 2024 Annual Report to Congress: calls for "Manhattan Project-style" effort

- 2025-05-29: DoE Tweet: "AI is the next Manhattan Project, and THE UNITED STATES WILL WIN. 🇺🇸"

- 2025-07: How big could an “AI Manhattan Project” get?

Near-term

- 2021-08: Daniel Kokotajlo: What 2026 looks like

- 2025-02: Sam Altman: Three Observations

- The intelligence of an AI model roughly equals the log of the resources used to train and run it.

- The cost to use a given level of AI falls about 10x every 12 months, and lower prices lead to much more use

- The socioeconomic value of linearly increasing intelligence is super-exponential in nature

- 2025-03: Glimpses of AI Progress: Mental models for fast times

- 2025-03: Navigating artificial general intelligence development: societal, technological, ethical, and brain-inspired pathways

- 2025-04: Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifland, Romeo Dean: AI 2027 (pdf)

- 2025-07: Video: Are We 3 Years From AI Disaster? A Rigorous Forecast

- 2025-04: Stanford HAI: Artificial Intelligence Index Report 2025

- 2025-04: Arvind Narayanan and Sayash Kapoor: AI as Normal Technology

- 2025-04: Dwarkesh Patel: Questions about the Future of AI

- 2025-05: Trends – Artificial Intelligence

- 2025-06: IdeaFoundry: Evolution vs. Extinction: The Choice is Ours: The next 18 months will decide whether AI ends us or evolves us

- 2025-07: Advanced AI: Possible futures: Five scenarios for how the AI-transition could unfold

- 2025-11: Android Dreams

- 2026-02: Citrini: The 2028 Global Intelligence Crisis: A Thought Exercise in Financial History, from the Future
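
The second of Sam Altman's "Three Observations" listed above (the cost to use a given level of AI falls about 10x every 12 months) can be read as a simple exponential decay. The sketch below is only an illustration of that arithmetic; the function name `relative_cost` and the assumption of a smooth, constant rate of decline are ours, not part of the linked essay.

```python
def relative_cost(months_elapsed, factor_per_year=10.0):
    """Cost of a fixed capability level, relative to its cost at month 0.

    Assumes (hypothetically) a smooth decline of `factor_per_year` every
    12 months, which is the rough figure quoted in the observation above.
    """
    return factor_per_year ** (-months_elapsed / 12.0)


if __name__ == "__main__":
    # Tabulate the implied relative cost at a few horizons.
    for months in (0, 6, 12, 24, 36):
        print(f"{months:>2} months: {relative_cost(months):.4f} of the starting cost")
```

Under this reading, a capability that costs 1 unit today would cost about one tenth of a unit after a year and about one hundredth after two years, provided the quoted rate holds.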

Insightful Analysis of Current State

- 2025-11: Andy Masley: The lump of cognition fallacy: The extended mind as the advance of civilization

- 2026-02: Eric Jang: As Rocks May Think

- 2026-02: Matt Shumer: Something Big Is Happening

- 2026-02: Minh Pham: Why Most Agent Harnesses Are Not Bitter Lesson Pilled

Overall

- 1993: Vernor Vinge: The Coming Technological Singularity: How to Survive in the Post-Human Era

- 2025-03: Kevin Roose (New York Times): Powerful A.I. Is Coming. We’re Not Ready. Three arguments for taking progress toward artificial general intelligence, or A.G.I., more seriously — whether you’re an optimist or a pessimist.

- 2025-03: Nicholas Carlini: My Thoughts on the Future of "AI": "I have very wide error bars on the potential future of large language models, and I think you should too."

- 2025-06: Sam Altman: The Gentle Singularity

Surveys of Opinions/Predictions

- 2016-06: 2016 Expert Survey on Progress in AI

- 2022-10: Forecasting Research Institute: Assessing Near-Term Accuracy in the Existential Risk Persuasion Tournament

- 2025-09: Ethan Mollick: Progress is ahead of expectations

- 2023-08: 2023 Expert Survey on Progress in AI

- 2024-01: Thousands of AI Authors on the Future of AI

- 2025-02: Why do Experts Disagree on Existential Risk and P(doom)? A Survey of AI Experts

- 2025-02: Nicholas Carlini: AI forecasting retrospective: you're (probably) over-confident

- 2025-04: Helen Toner: "Long" timelines to advanced AI have gotten crazy short

- 2025-05: AI 2025 Forecasts - May Update

- 2026-02: Lay beliefs about the badness, likelihood, and importance of human extinction

Bad Outcomes

- List of p(doom) values

- 2019-03: What failure looks like

- 2023-03: gwern: It Looks Like You’re Trying To Take Over The World

- 2025-01: Gradual Disempowerment: Systemic Existential Risks from Incremental AI Development (web version)

- 2025-04: Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifland, Romeo Dean: AI 2027 (pdf)

- 2025-04: AI-Enabled Coups: How a Small Group Could Use AI to Seize Power

- 2025-09: The three main doctrines on the future of AI

- Dominance doctrine: First actor to create advanced AI will attain overwhelming strategic superiority

- Extinction doctrine: Humanity will lose control of ASI, leading to extinction or permanent disempowerment

- Replacement doctrine: AI will automate human tasks, but without fundamentally reshaping or ending civilization

- 2025-09: Sean ÓhÉigeartaigh: Extinction of the human species: What could cause it and how likely is it to occur?

Intelligence Explosion

- 2023-06: What a Compute-Centric Framework Says About Takeoff Speeds

- 2025-02: Three Types of Intelligence Explosion

- 2025-03: Future of Life Institute: Are we close to an intelligence explosion? AIs are inching ever-closer to a critical threshold. Beyond this threshold lie great risks—but crossing it is not inevitable.

- 2025-03: Forethought: Will AI R&D Automation Cause a Software Intelligence Explosion?

- 2025-05: The Last Invention: Why Humanity’s Final Creation Changes Everything

- 2025-08: How quick and big would a software intelligence explosion be?

Superintelligence

- 2024-10: How Smart will ASI be?

- 2024-11: Concise Argument for ASI Risk

- 2025-03: Limits of smart

- 2025-05: The Limits of Superintelligence

Long-range/Philosophy

- 2023-03: Dan Hendrycks: Natural Selection Favors AIs over Humans

Psychology

Positives & Optimism

Science & Technology Improvements

- 2023-05: Kelsey Piper: The costs of caution

- 2024-09: Sam Altman: The Intelligence Age

- 2024-10: Dario Amodei: Machines of Loving Grace

- 2024-11: Google DeepMind: A new golden age of discovery

- 2025-03: Fin Moorhouse, Will MacAskill: Preparing for the Intelligence Explosion

Social

- 2025-09: Coasean Bargaining at Scale: Decentralization, coordination, and co-existence with AGI

- 2025-10: The Coasean Singularity? Demand, Supply, and Market Design with AI Agents

Post-scarcity Society

- 2004: Eliezer Yudkowsky (MIRI): Coherent Extrapolated Volition and Fun Theory

- 2019: John Danaher: Automation and Utopia: Human Flourishing in a World Without Work

The Grand Tradeoff

- 2026-02: Nick Bostrom: Optimal Timing for Superintelligence: Mundane Considerations for Existing People

Plans

- A Narrow Path: How to Secure our Future

- Marius Hobbhahn: What’s the short timeline plan?

- Building CERN for AI: An institutional blueprint

- AGI, Governments, and Free Societies

- Control AI: The Direct Institutional Plan

- Luke Drago and L Rudolf L: The use of knowledge in (AGI) society: How to build to break the intelligence curse

- AGI Social Contract

- Yoshua Bengio: A Potential Path to Safer AI Development

- 2026-01: Dario Amodei: The Adolescence of Technology: Confronting and Overcoming the Risks of Powerful AI

- 2026-02: Ryan Greenblatt: How do we (more) safely defer to AIs?

Philosophy

- Dan Faggella:

- Joe Carlsmith: 2024: Otherness and control in the age of AGI

- Anthony Aguirre:

- 2025-04: Scott Alexander (Astral Codex Ten): The Colors Of Her Coat (response to semantic apocalypse and semantic satiation)

- 2025-05: Helen Toner: We’re Arguing About AI Safety Wrong: Dynamism vs. stasis is a clearer lens for criticizing controversial AI safety prescriptions

- 2025-05: Joe Carlsmith: The stakes of AI moral status

Research

Alignment

- 2023-03: Leopold Aschenbrenner: Nobody’s on the ball on AGI alignment

- 2024-03: What are human values, and how do we align AI to them? (blog)

- 2025: Joe Carlsmith: How do we solve the alignment problem? Introduction to an essay series on paths to safe, useful superintelligence

- What is it to solve the alignment problem? Also: to avoid it? Handle it? Solve it forever? Solve it completely? (audio version)

- When should we worry about AI power-seeking? (audio version)

- Paths and waystations in AI safety (audio version)

- AI for AI safety (audio version)

- Can we safely automate alignment research? (audio version, video version)

- Giving AIs safe motivations (audio version)

- Controlling the options AIs can pursue (audio version)

- How human-like do safe AI motivations need to be? (audio version)

- Building AIs that do human-like philosophy: AIs will face philosophical questions humans can't answer for them (audio version)

- 2025-04: Dario Amodei: The Urgency of Interpretability

Strategic/Technical

- 2025-03: AI Dominance Requires Interpretability and Standards for Transparency and Security

- 2026-02: Fundamental Development Gap Map v1.0

Strategic/Policy

- 2015-03: Sam Altman: Machine intelligence, part 2

- 2019-07: Amanda Askell, Miles Brundage, Gillian Hadfield: The Role of Cooperation in Responsible AI Development

- 2025-03: Dan Hendrycks, Eric Schmidt, Alexandr Wang: Superintelligence Strategy

- Anthony Aguirre: Keep The Future Human (essay)

- The 4 Rules That Could Stop AI Before It’s Too Late (video) (2025)

- Oversight: Registration required for training >10^25 FLOP and inference >10^19 FLOP/s (~1,000 B200 GPUs @ $25M). Build cryptographic licensing into hardware.

- Computation Limits: Ban on training models >10^27 FLOP or inference >10^20 FLOP/s.

- Strict Liability: Hold AI companies responsible for outcomes.

- Tiered Regulation: Low regulation on tool-AI, strictest regulation on AGI (general, capable, autonomous systems).

- 2025-04: Helen Toner: Nonproliferation is the wrong approach to AI misuse

- 2025-04: MIRI: AI Governance to Avoid Extinction: The Strategic Landscape and Actionable Research Questions

- 2025-05: The New AI Policy Frontier: Beyond the shortcomings of centralised control and alignment, a new school of thought on AI governance emerges. It still faces tricky politics.

- 2025-05: AGI UNCPGA Report: Governance of the Transition to Artificial General Intelligence (AGI): Urgent Considerations for the UN General Assembly: Report for the Council of Presidents of the United Nations General Assembly (UNCPGA)

- 2025-06: AI & Jobs: Politics without Policy: Political support mounts - for a policy platform that does not yet exist

- 2025-06: Sarah Hastings-Woodhouse: Safety Features for a Centralized AGI Project

- 2025-07: A Moving Target: Why we might not be quite ready to comprehensively regulate AI, and why it matters

- 2025-07: Anthropic: Build AI in America (blog)

- 2025-12: How middle powers may prevent the development of artificial superintelligence
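
The compute thresholds quoted in the Anthony Aguirre entry above (registration at 10^25 FLOP of training or 10^19 FLOP/s of inference, described as roughly 1,000 B200 GPUs at $25M; bans at 10^27 FLOP or 10^20 FLOP/s) are easier to compare once reduced to per-GPU and per-day terms. The sketch below only rearranges the figures quoted in that entry. The helper names and the assumption of continuous, fully utilized hardware are ours, and the result is a rough sanity check rather than a statement about real hardware.

```python
# Figures as quoted in the Oversight and Computation Limits rules above.
TRAIN_REGISTRATION_FLOP = 1e25
TRAIN_BAN_FLOP = 1e27
INFER_REGISTRATION_FLOP_PER_S = 1e19
INFER_BAN_FLOP_PER_S = 1e20
CLUSTER_GPUS = 1_000          # "~1,000 B200 GPUs"
CLUSTER_COST_USD = 25e6       # "@ $25M"

SECONDS_PER_DAY = 86_400


def implied_per_gpu(rate_flop_per_s, gpus, cost_usd):
    """Return (FLOP/s per GPU, USD per GPU) implied by cluster-level figures."""
    return rate_flop_per_s / gpus, cost_usd / gpus


def days_to_accumulate(total_flop, rate_flop_per_s):
    """Days of continuous compute needed to accumulate `total_flop`.

    Assumption (ours): the hardware runs at the quoted rate with 100%
    utilization, which real training runs do not achieve.
    """
    return total_flop / rate_flop_per_s / SECONDS_PER_DAY


if __name__ == "__main__":
    rate, cost = implied_per_gpu(
        INFER_REGISTRATION_FLOP_PER_S, CLUSTER_GPUS, CLUSTER_COST_USD
    )
    print(f"Implied throughput per GPU: {rate:.1e} FLOP/s")
    print(f"Implied cost per GPU: ${cost:,.0f}")

    days = days_to_accumulate(TRAIN_REGISTRATION_FLOP, INFER_REGISTRATION_FLOP_PER_S)
    print(f"Days for a registration-scale cluster to reach 10^25 FLOP: {days:.1f}")

    days_ban = days_to_accumulate(TRAIN_BAN_FLOP, INFER_BAN_FLOP_PER_S)
    print(f"Days for a ban-scale cluster to reach 10^27 FLOP: {days_ban:.1f}")
```

Read this way, the quoted numbers imply about 10^16 FLOP/s and $25,000 per GPU, and a cluster sitting exactly at the inference registration threshold would need on the order of a week and a half of ideal, uninterrupted compute to reach the training registration threshold.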

Restriction

- 2024-05: OpenAI: Reimagining secure infrastructure for advanced AI: OpenAI calls for an evolution in infrastructure security to protect advanced AI

- 2025-07: MIRI: Technical Requirements for Halting Dangerous AI Activities