AI predictions

Last edited by KevinYager

Revision as of 10:48, 27 May 2025


Capability Scaling

- 2019-03: Rich Sutton: The Bitter Lesson

- 2020-09: Ajeya Cotra: Draft report on AI timelines

- 2022-01: gwern: The Scaling Hypothesis

- 2023-05: Richard Ngo: Clarifying and predicting AGI

- 2024-06: Aidan McLaughlin: AI Search: The Bitter-er Lesson

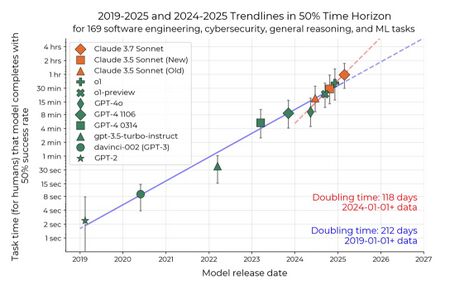

- 2025-03: Measuring AI Ability to Complete Long Tasks

- 2025-04: Forecaster reacts: METR's bombshell paper about AI acceleration: New data supports an exponential AI curve, but lots of uncertainty remains

- 2025-04: AI Digest: A new Moore's Law for AI agents

- 2025-04: Trends in AI Supercomputers

- The Road to AGI (timeline visualization)
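The METR time-horizon items above describe the length of tasks AI agents can complete growing exponentially (METR's 2025 paper reported a doubling time of roughly seven months). A minimal sketch of what such a trend implies if it simply continues, assuming a pure exponential with a fixed doubling time; the starting horizon, the doubling time, and the ~167-hour "work-month" are hypothetical inputs, not fitted values.

```python
import math

def horizon_after(months: float, start_hours: float, doubling_months: float) -> float:
    """Task-length horizon (hours) after `months`, assuming pure exponential growth."""
    return start_hours * 2 ** (months / doubling_months)

def months_to_reach(target_hours: float, start_hours: float, doubling_months: float) -> float:
    """Months until the horizon reaches `target_hours` under the same assumption."""
    return doubling_months * math.log2(target_hours / start_hours)

# Hypothetical inputs: a 1-hour horizon today, doubling every 7 months.
start, doubling = 1.0, 7.0
for years in (1, 2, 3, 5):
    print(f"{years} yr: ~{horizon_after(12 * years, start, doubling):.1f} h")

# A work-month is taken here as ~167 hours (an assumption).
print(f"~{months_to_reach(167, start, doubling):.0f} months to a one-month horizon")
```

Small changes in the assumed doubling time shift these dates by years, which is one reason the forecasts above stress the remaining uncertainty.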

AGI Achievable

- Yoshua Bengio: Managing extreme AI risks amid rapid progress

- Leopold Aschenbrenner: Situational Awareness: Counting the OOMs

- Richard Ngo: Visualizing the deep learning revolution

- Katja Grace: Survey of 2,778 AI authors: six parts in pictures

- Epoch AI: Machine Learning Trends

- AI Digest: How fast is AI improving?

AGI Definition

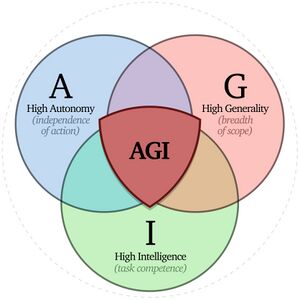

- 2023-11: Allan Dafoe, Shane Legg, et al.: Levels of AGI for Operationalizing Progress on the Path to AGI

- 2024-04: Bowen Xu: What is Meant by AGI? On the Definition of Artificial General Intelligence

Progress Models

Economic and Political

- 2019-11: The Impact of Artificial Intelligence on the Labor Market

- 2023-10: Marc Andreessen: The Techno-Optimist Manifesto

- 2023-12: My techno-optimism: "defensive acceleration" (Vitalik Buterin)

- 2024-03: Noah Smith: Plentiful, high-paying jobs in the age of AI: Comparative advantage is very subtle, but incredibly powerful. (video)

- 2024-03: Scenarios for the Transition to AGI (AGI leads to wage collapse)

- 2024-06: Situational Awareness (Leopold Aschenbrenner) - select quotes, podcast, text summary of podcast

- 2024-06: AI and Growth: Where Do We Stand?

- 2024-09: OpenAI: Infrastructure is Destiny: Economic Returns on US Investment in Democratic AI

- 2024-12: By default, capital will matter more than ever after AGI (L Rudolf L)

- 2025-01: The Intelligence Curse: With AGI, powerful actors will lose their incentives to invest in people

  - Updated 2025-04: The Intelligence Curse (Luke Drago and Rudolf Laine)

- 2025-01: Microsoft: The Golden Opportunity for American AI

- 2025-01: AGI Will Not Make Labor Worthless

- 2025-01: AI in America: OpenAI's Economic Blueprint (blog)

- 2025-01: How much economic growth from AI should we expect, how soon?

- 2025-02: Morgan Stanley: The Humanoid 100: Mapping the Humanoid Robot Value Chain

- 2025-02: The Anthropic Economic Index: Which Economic Tasks are Performed with AI? Evidence from Millions of Claude Conversations

- 2025-02: Strategic Wealth Accumulation Under Transformative AI Expectations

- 2025-02: Tyler Cowen: Why I think AI take-off is relatively slow

- 2025-03: Epoch AI: Most AI value will come from broad automation, not from R&D

  - The primary economic impact of AI will be its ability to broadly automate labor

  - Automating AI R&D alone likely won’t dramatically accelerate AI progress

  - Fully automating R&D requires a very broad set of abilities

  - AI takeoff will likely be diffuse and salient

- 2025-03: Anthropic Economic Index: Insights from Claude 3.7 Sonnet

- 2025-04: Will there be extreme inequality from AI?

- 2025-04: Anthropic Economic Index: AI’s Impact on Software Development

- 2025-05: Better at everything: how AI could make human beings irrelevant
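The Epoch AI argument above, that automating AI R&D alone likely won't dramatically accelerate progress, can be illustrated with Amdahl's law. This is a swapped-in illustration, not Epoch AI's own model: it assumes a fraction `p` of the work is automated and sped up by a factor `s`, while the remainder proceeds at its old pace. The scenario values are hypothetical.

```python
def overall_speedup(p: float, s: float) -> float:
    """Amdahl's law: total speedup when a fraction p of the work runs s times faster."""
    if not 0.0 <= p <= 1.0 or s <= 0:
        raise ValueError("p must be in [0, 1] and s must be positive")
    return 1.0 / ((1.0 - p) + p / s)

# Hypothetical scenarios: even a huge speedup on part of the work is capped by the rest.
for p in (0.5, 0.9, 0.99):
    print(f"p={p}: s=10 -> {overall_speedup(p, 10):.1f}x, "
          f"s=1000 -> {overall_speedup(p, 1000):.1f}x")
```

With half the work automated, the total speedup can never exceed 2x however large `s` becomes; under this reading, large gains require automating nearly all of the work, which matches the point that full automation needs a very broad set of abilities.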

Job Loss

- 2023-03: GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models

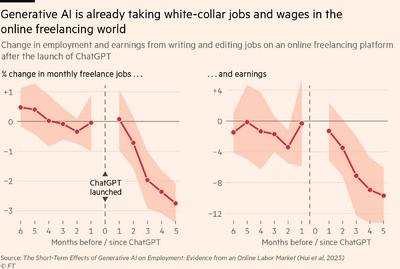

- 2023-08: The Short-Term Effects of Generative Artificial Intelligence on Employment: Evidence from an Online Labor Market

- 2023-09: What drives UK firms to adopt AI and robotics, and what are the consequences for jobs?

- 2023-11: New Analysis Shows Over 20% of US Jobs Significantly Exposed to AI Automation In the Near Future

- 2024-01: Duolingo cuts 10% of its contractor workforce as the company embraces AI

- 2024-02: Gen AI is a tool for growth, not just efficiency: Tech CEOs are investing to build their workforce and capitalise on new opportunities from generative AI. That’s a sharp contrast to how their peers view it.

- 2024-04: AI is Poised to Replace the Entry-Level Grunt Work of a Wall Street Career

- 2024-07: AI Is Already Taking Jobs in the Video Game Industry: A WIRED investigation finds that major players like Activision Blizzard, which recently laid off scores of workers, are using generative AI for game development

- 2024-08: Klarna: AI lets us cut thousands of jobs - but pay more

- 2025-01: AI and Freelancers: Has the Inflection Point Arrived?

- 2025-01: Yes, you're going to be replaced: So much cope about AI

- 2025-03: Will AI Automate Away Your Job? The time-horizon model explains the future of the technology

- 2025-05: It’s Time To Get Concerned, Klarna, UPS, Duolingo, Cisco, And Many Other Companies Are Replacing Workers With AI

National Security

- 2025-04: Jeremie Harris and Edouard Harris: America’s Superintelligence Project

Near-term

- 2021-08: Daniel Kokotajlo: What 2026 looks like

- 2025-02: Sam Altman: Three Observations

  - The intelligence of an AI model roughly equals the log of the resources used to train and run it.

  - The cost to use a given level of AI falls about 10x every 12 months, and lower prices lead to much more use.

  - The socioeconomic value of linearly increasing intelligence is super-exponential in nature.

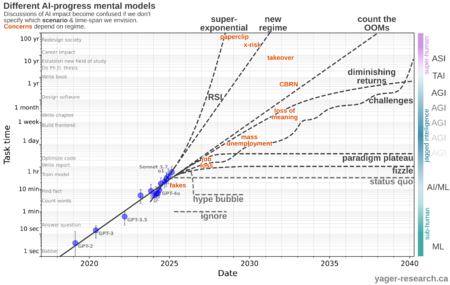

- 2025-03: Glimpses of AI Progress: Mental models for fast times

- 2025-04: Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifland, Romeo Dean: AI 2027 (pdf)

- 2025-04: Stanford HAI: Artificial Intelligence Index Report 2025

- 2025-04: Arvind Narayanan and Sayash Kapoor: AI as Normal Technology

- 2025-04: Dwarkesh Patel: Questions about the Future of AI
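Sam Altman's first two observations (2025-02, above) can be turned into simple arithmetic. A minimal sketch, assuming both relationships hold exactly as stated: "intelligence" is modeled as log10 of resources in arbitrary units, and the price of a fixed capability level falls 10x per year. The $100 workload is a hypothetical example.

```python
import math

def relative_intelligence(resources: float) -> float:
    """Observation 1, taken literally: intelligence ~ log(resources); log10, arbitrary units."""
    return math.log10(resources)

def cost_after(initial_cost: float, years: float, factor_per_year: float = 10.0) -> float:
    """Observation 2: cost of a fixed capability level falls ~10x every 12 months."""
    return initial_cost / factor_per_year ** years

# Each 10x increase in resources adds one unit of "intelligence" under this reading.
print(relative_intelligence(1e24) - relative_intelligence(1e23))

# A hypothetical $100 workload at today's capability level, priced 1 to 3 years later.
for y in (1, 2, 3):
    print(f"year {y}: ${cost_after(100.0, y):.2f}")
```

Read together, steady linear gains in "intelligence" require exponentially growing resources, while the cost of any fixed level drops exponentially.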

Overall

- 2025-03: Kevin Roose (New York Times): Powerful A.I. Is Coming. We’re Not Ready. Three arguments for taking progress toward artificial general intelligence, or A.G.I., more seriously — whether you’re an optimist or a pessimist.

- 2025-03: Nicholas Carlini: My Thoughts on the Future of "AI": "I have very wide error bars on the potential future of large language models, and I think you should too."

Surveys of Opinions/Predictions

- 2016-06: 2016 Expert Survey on Progress in AI

- 2023-08: 2023 Expert Survey on Progress in AI

- 2024-01: Thousands of AI Authors on the Future of AI

- 2025-02: Why do Experts Disagree on Existential Risk and P(doom)? A Survey of AI Experts

- 2025-02: Nicholas Carlini: AI forecasting retrospective: you're (probably) over-confident

- 2025-04: Helen Toner: "Long" timelines to advanced AI have gotten crazy short

- 2025-05: AI 2025 Forecasts - May Update

Bad Outcomes

- List of p(doom) values

- 2019-03: What failure looks like

- 2023-03: gwern: It Looks Like You’re Trying To Take Over The World

- 2025-01: Gradual Disempowerment: Systemic Existential Risks from Incremental AI Development (web version)

- 2025-04: Daniel Kokotajlo, Scott Alexander, Thomas Larsen, Eli Lifland, Romeo Dean: AI 2027 (pdf)

- 2025-04: AI-Enabled Coups: How a Small Group Could Use AI to Seize Power

Intelligence Explosion

- 2025-02: Three Types of Intelligence Explosion

- 2025-03: Future of Life Institute: Are we close to an intelligence explosion? AIs are inching ever-closer to a critical threshold. Beyond this threshold lie great risks—but crossing it is not inevitable.

- 2025-03: Forethought: Will AI R&D Automation Cause a Software Intelligence Explosion?
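The intelligence-explosion items above ask whether AI-driven improvement could feed back on itself fast enough to accelerate. A toy sketch, not the Forethought or FLI model: capability `C` grows as dC/dt = k·C^λ, integrated with a simple Euler step. The exponent λ (`lam`, a hypothetical "returns" parameter) and every other constant here are illustrative assumptions. λ < 1 gives decelerating growth, λ = 1 gives steady exponential growth, and λ > 1 gives accelerating growth that blows up in finite time.

```python
def simulate(lam: float, k: float = 0.1, c0: float = 1.0,
             dt: float = 0.01, t_max: float = 50.0,
             cap: float = 1e12) -> tuple[float, float]:
    """Euler-integrate dC/dt = k * C**lam; return (time reached, final capability).

    Stops early once capability reaches `cap`, a stand-in for 'explosive' growth.
    """
    t, c = 0.0, c0
    while t < t_max and c < cap:
        c += k * c ** lam * dt
        t += dt
    return t, c

for lam in (0.5, 1.0, 1.5):
    t, c = simulate(lam)
    print(f"lambda={lam}: C={c:.3g} at t={t:.1f}")
```

Only the λ > 1 run hits the cap well before `t_max`; the debate in the linked pieces is, in effect, about which regime real AI R&D feedback falls into.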

Superintelligence

- 2024-11: Concise Argument for ASI Risk

- 2025-03: Limits of smart

- 2025-05: The Limits of Superintelligence

Long-range/Philosophy

- 2023-03: Dan Hendrycks: Natural Selection Favors AIs over Humans

Psychology

Science & Technology Improvements

- 2024-09: Sam Altman: The Intelligence Age

- 2024-10: Dario Amodei: Machines of Loving Grace

- 2024-11: Google DeepMind: A new golden age of discovery

- 2025-03: Fin Moorhouse, Will MacAskill: Preparing for the Intelligence Explosion

Plans

- A Narrow Path: How to Secure our Future

- Marius Hobbhahn: What’s the short timeline plan?

- Building CERN for AI: An institutional blueprint

- AGI, Governments, and Free Societies

- Control AI: The Direct Institutional Plan

- Luke Drago and L Rudolf L: The use of knowledge in (AGI) society: How to build to break the intelligence curse

- AGI Social Contract

- Yoshua Bengio: A Potential Path to Safer AI Development

Philosophy

- Dan Faggella:

- 2024: Joe Carlsmith: Otherness and control in the age of AGI

- Anthony Aguirre:

- 2025-04: Scott Alexander (Astral Codex Ten): The Colors Of Her Coat (response to semantic apocalypse and semantic satiation)

- 2025-05: Helen Toner: We’re Arguing About AI Safety Wrong: Dynamism vs. stasis is a clearer lens for criticizing controversial AI safety prescriptions

Alignment

- 2023-03: Leopold Aschenbrenner: Nobody’s on the ball on AGI alignment

- 2024-03: What are human values, and how do we align AI to them? (blog)

- 2025: Joe Carlsmith: How do we solve the alignment problem? Introduction to an essay series on paths to safe, useful superintelligence

  - What is it to solve the alignment problem? Also: to avoid it? Handle it? Solve it forever? Solve it completely? (audio version)

  - When should we worry about AI power-seeking? (audio version)

  - Paths and waystations in AI safety (audio version)

  - AI for AI safety (audio version)

  - Can we safely automate alignment research? (audio version, video version)

  - The stakes of AI moral status

- 2025-04: Dario Amodei: The Urgency of Interpretability

Strategic/Technical

Strategic/Policy

- 2015-03: Sam Altman: Machine intelligence, part 2

- 2019-07: Amanda Askell, Miles Brundage, Gillian Hadfield: The Role of Cooperation in Responsible AI Development

- 2025-03: Dan Hendrycks, Eric Schmidt, Alexandr Wang: Superintelligence Strategy

- Anthony Aguirre: Keep The Future Human (essay)

  - The 4 Rules That Could Stop AI Before It’s Too Late (video) (2025)

    - Oversight: Registration required for training >10²⁵ FLOP and inference >10¹⁹ FLOP/s (~1,000 B200 GPUs @ $25M). Build cryptographic licensing into hardware.

    - Computation Limits: Ban on training models >10²⁷ FLOP or inference >10²⁰ FLOP/s.

    - Strict Liability: Hold AI companies responsible for outcomes.

    - Tiered Regulation: Low regulation on tool-AI, strictest regulation on AGI (general, capable, autonomous systems).


- 2025-04: Helen Toner: Nonproliferation is the wrong approach to AI misuse

- 2025-04: MIRI: AI Governance to Avoid Extinction: The Strategic Landscape and Actionable Research Questions

- 2025-05: The New AI Policy Frontier: Beyond the shortcomings of centralised control and alignment, a new school of thought on AI governance emerges. It still faces tricky politics.
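The Oversight and Computation Limits rules listed under Keep The Future Human set thresholds of 10^25 and 10^27 FLOP for training and 10^19 and 10^20 FLOP/s for inference. A minimal sketch of checking a system against them, assuming the common rule of thumb that dense-transformer training compute is about 6 × parameters × training tokens (a swapped-in heuristic, not part of the proposal). The 400B-parameter, 15T-token run is hypothetical.

```python
# Thresholds from the Oversight and Computation Limits rules (FLOP and FLOP/s).
REGISTER_TRAIN, REGISTER_INFER = 1e25, 1e19
BAN_TRAIN, BAN_INFER = 1e27, 1e20

def training_flop(params: float, tokens: float) -> float:
    """Rough dense-transformer estimate: ~6 FLOP per parameter per training token (an assumption)."""
    return 6.0 * params * tokens

def classify(train_flop: float, infer_flop_per_s: float) -> str:
    """Place a system in the registration / prohibition tiers."""
    if train_flop > BAN_TRAIN or infer_flop_per_s > BAN_INFER:
        return "prohibited"
    if train_flop > REGISTER_TRAIN or infer_flop_per_s > REGISTER_INFER:
        return "registration required"
    return "below thresholds"

# Hypothetical run: 400B parameters trained on 15T tokens.
run = training_flop(4e11, 1.5e13)
print(f"{run:.1e} FLOP -> {classify(run, 0.0)}")

# Per-GPU throughput implied by "1e19 FLOP/s ~ 1,000 GPUs".
print(f"{REGISTER_INFER / 1000:.0e} FLOP/s per GPU")
```

The second line shows that the "~1,000 B200 GPUs" figure corresponds to roughly 10^16 FLOP/s (10 PFLOP/s) per GPU.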